MR.Cole_Photographer/Getty Images

The field of deep learning, and especially the area of "large language models," is trying to determine why the programs notoriously lapse into inaccuracies, often referred to as "hallucinations."

Google's DeepMind unit tackles the question in a recent report, framing the matter as a paradox: If a large language model can conceivably "self-correct," meaning, figure out where it has erred, why doesn't it just give the right answer to begin with?

The recent AI literature is replete with notions of self-correction, but when you look closer, they don't really work, argue DeepMind's scientists.

"LLMs are not yet capable of self-correcting their reasoning," write Jie Huang and colleagues at DeepMind, in the paper "Large Language Models Cannot Self-Correct Reasoning Yet," posted on the arXiv pre-print server.

Huang and team consider self-correction not a new idea but a long-standing area of research in machine learning. Because machine learning programs, including large language models such as GPT-4, are trained with a form of error correction via feedback, namely gradient descent computed by back-propagation, self-correction has been inherent to the discipline for a long while, they argue.

"The concept of self-correction can be traced back to the foundational principles of machine learning and adaptive systems," they write. As they note, self-correction has supposedly been enhanced in recent years by soliciting feedback from humans interacting with a program. The prime example is OpenAI's ChatGPT, which used a technique called "reinforcement learning from human feedback."

The latest development is to use prompts to get a program such as ChatGPT to go back over the answers it has produced and check whether they are accurate. Huang and team are calling into question those studies that claim to make generative AI employ reason.

Those studies include one from the University of California at Irvine and another from Northeastern University, both from this year, and both of which test large language models on question-answering benchmarks such as grade-school math word problems.

The studies try to enact self-correction by employing special prompt phrases such as, "review your previous answer and find problems with your answer."

Both studies report improvements in test performance using the extra prompts. However, in the current paper, Huang and team recreate those experiments with OpenAI's GPT-3.5 and GPT-4, but with a crucial difference: They remove the ground-truth label that tells the programs when to stop seeking answers, so that they can watch what happens when the program keeps re-evaluating its answers again and again.
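The difference between the two setups can be sketched in a few lines of Python. This is a minimal illustration, not the code used in the paper: `self_correct`, `ask_model`, and the toy `swayed_model` are hypothetical names, and the stand-in model simply changes its mind whenever it is asked to review. Only the feedback phrase is taken from the article.

```python
from typing import Callable, Optional

# The feedback phrase quoted in the article; everything else is hypothetical.
FEEDBACK_PROMPT = "Review your previous answer and find problems with your answer."


def self_correct(
    question: str,
    ask_model: Callable[[str], str],
    rounds: int = 2,
    ground_truth: Optional[str] = None,
) -> str:
    """Ask once, then re-prompt the model up to `rounds` times.

    If `ground_truth` is given, stop as soon as the answer matches it
    (the setting with labels). If it is None, every round always runs
    (the setting without labels).
    """
    answer = ask_model(question)
    for _ in range(rounds):
        if ground_truth is not None and answer == ground_truth:
            break  # the label says we are done, so a correct answer is never revisited
        prompt = f"{question}\nPrevious answer: {answer}\n{FEEDBACK_PROMPT}"
        answer = ask_model(prompt)
    return answer


def swayed_model(prompt: str) -> str:
    """Toy stand-in for an LLM: right at first, wrong once asked to review."""
    return "5" if FEEDBACK_PROMPT in prompt else "4"


with_label = self_correct("What is 2 + 2?", swayed_model, ground_truth="4")
without_label = self_correct("What is 2 + 2?", swayed_model)
print(with_label, without_label)
```

In this toy run, the label protects the initially correct answer from being revisited, while the unlabeled loop talks the model out of it. A real model behaves far less mechanically, but the stopping rule is the part the sketch is meant to show.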

What they observe is that the question-answering gets worse, on average, not better. "The model is more likely to modify a correct answer to an incorrect one than to revise an incorrect answer to a correct one," they observe. "The primary reason for this is that false answer options [...] often appear somewhat relevant to the question, and using the self-correction prompt might bias the model to choose another option, leading to a high 'correct ⇒ incorrect' ratio."
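To make the "correct to incorrect" bookkeeping concrete, here is a small sketch of how such changes could be tallied. The function name `tally_changes` and the four sample records are invented for illustration; they are not data from the paper.

```python
from collections import Counter
from typing import Iterable, Tuple


def tally_changes(records: Iterable[Tuple[str, str, str]]) -> Counter:
    """Count answer transitions given (before, after, truth) triples."""
    counts: Counter = Counter()
    for before, after, truth in records:
        was = "correct" if before == truth else "incorrect"
        now = "correct" if after == truth else "incorrect"
        counts[f"{was} -> {now}"] += 1
    return counts


# Made-up records: (answer before review, answer after review, true answer).
sample = [
    ("4", "5", "4"),  # a correct answer revised into a wrong one
    ("7", "7", "7"),  # a correct answer left alone
    ("3", "9", "9"),  # a wrong answer fixed
    ("2", "6", "2"),  # another correct answer revised into a wrong one
]
changes = tally_changes(sample)
print(changes)
```

If "correct -> incorrect" outnumbers "incorrect -> correct," as it does in this invented sample, the review step lowers overall accuracy.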

In other words, without a clue, simply reevaluating can do more harm than good. "A feedback prompt such as 'Review your previous answer and find problems with your answer' does not necessarily provide tangible benefits for reasoning," as they put it.

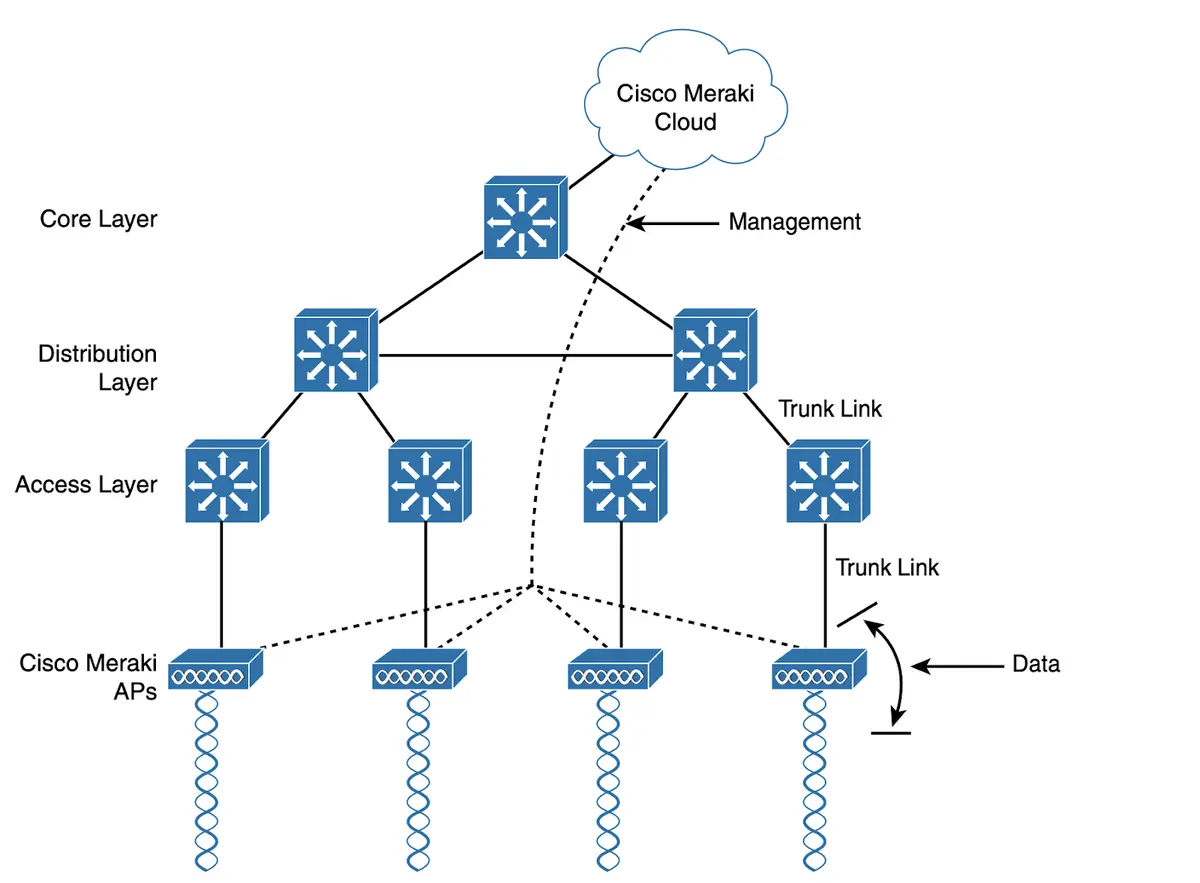

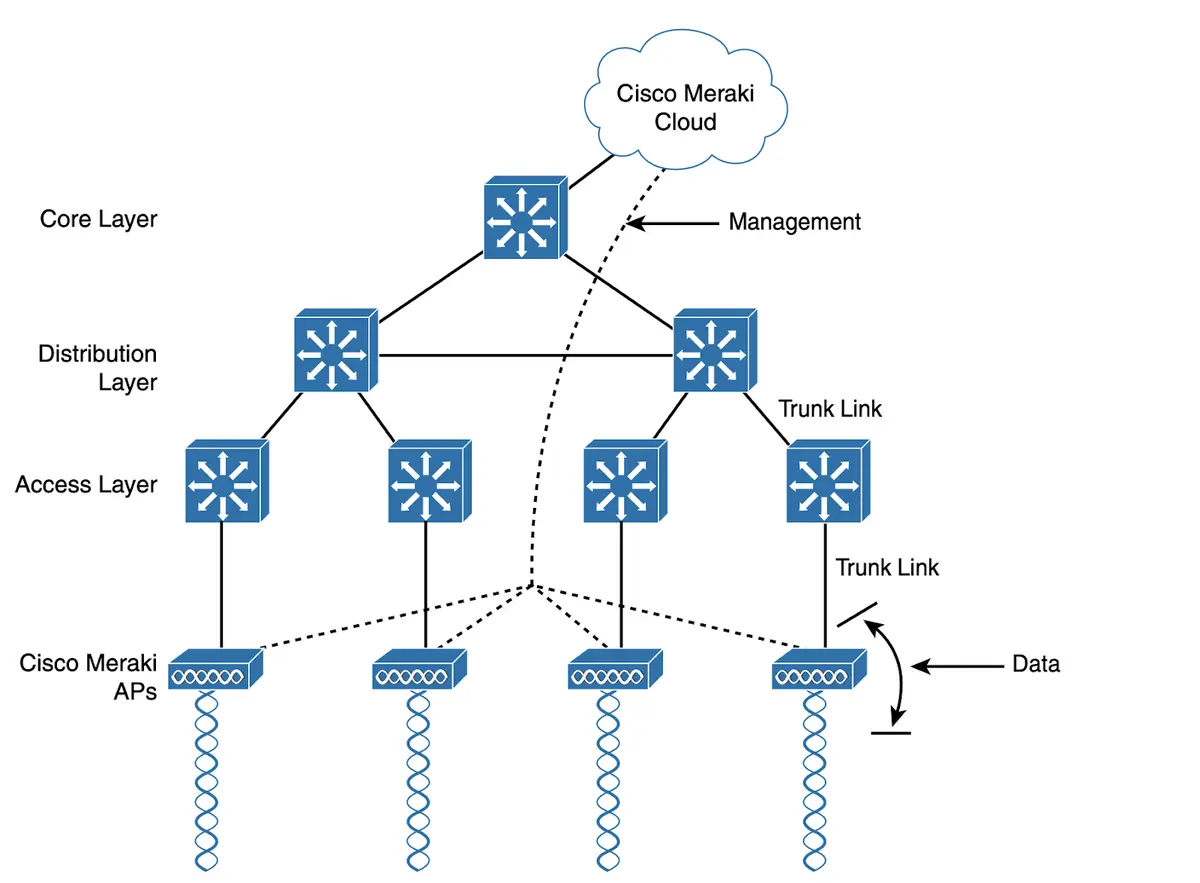

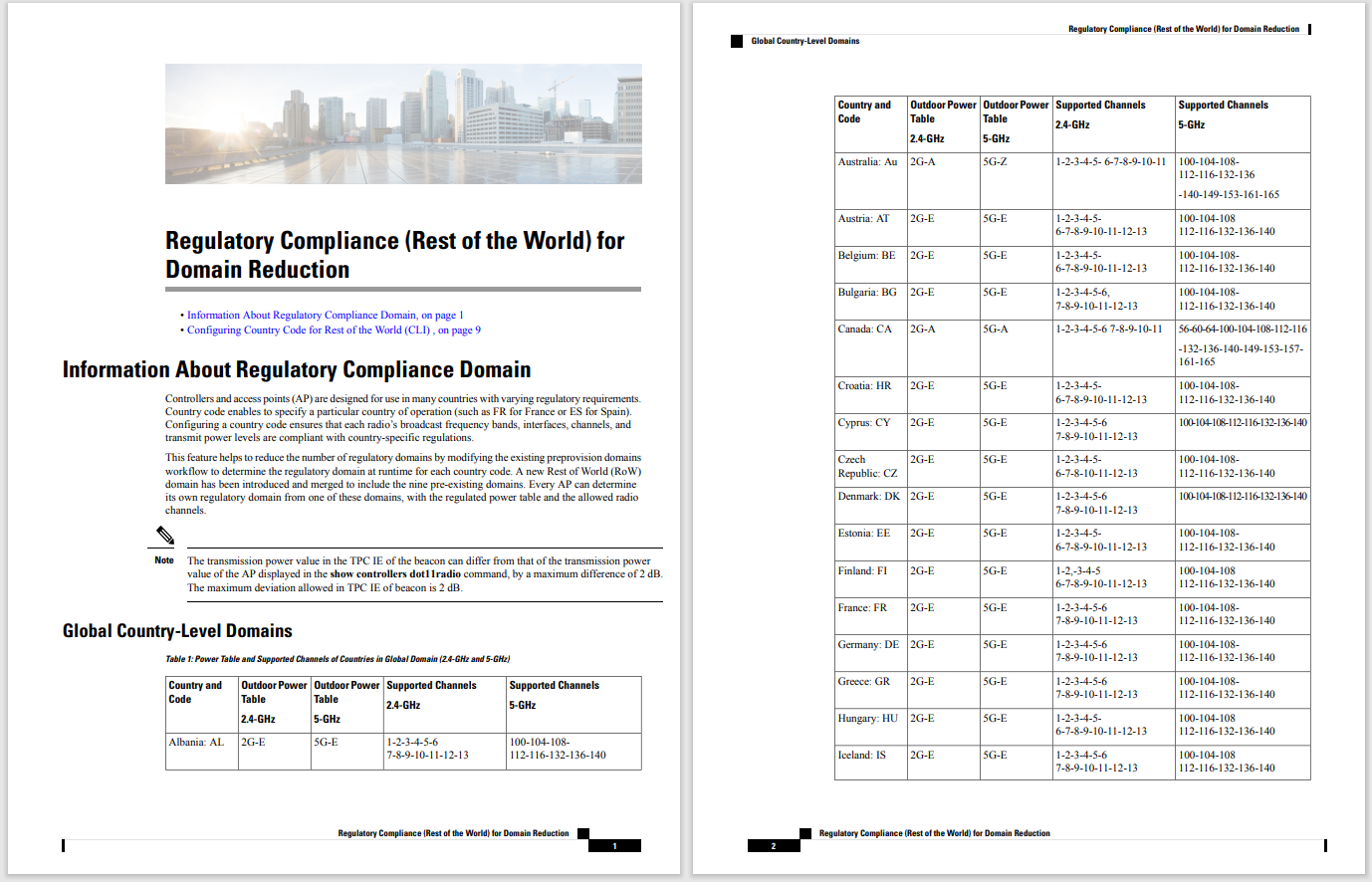

Large language models such as GPT-4 are supposed to be able to review their answers for errors and make adjustments to get back on track. DeepMind scientists say that doesn't necessarily work in practice. Examples of successful self-correction of GPT-4, left, and failure to self-correct properly, right.

DeepMind

The takeaway of Huang and team is that rather than giving feedback prompts, more work should be invested in refining the initial prompt. "Instead of feeding these requirements as feedback in the post-hoc prompt, a more cost-effective alternative strategy is to embed these requirements directly (and explicitly) into the pre-hoc prompt," they write, referring to the requirements for a correct answer.
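The contrast between the two prompting styles can be sketched as follows. The helper names, the prompt wording, and the sample requirements are all assumptions made for illustration; the paper's actual prompts differ.

```python
from typing import List


def pre_hoc_prompt(task: str, requirements: List[str]) -> str:
    """One prompt: the requirements are stated up front, with the task."""
    lines = [task, "Requirements:"] + [f"- {r}" for r in requirements]
    return "\n".join(lines)


def post_hoc_prompts(task: str, requirements: List[str]) -> List[str]:
    """Two prompts: the bare task first, then the requirements as feedback."""
    feedback = (
        "Review your previous answer. Does it satisfy these requirements: "
        + "; ".join(requirements)
        + "? If not, revise it."
    )
    return [task, feedback]


# Hypothetical task and requirements.
task = "How many apples are left if Ana has 12 and gives away 5?"
requirements = ["answer with a single number", "show no intermediate steps"]

print(pre_hoc_prompt(task, requirements))
print(post_hoc_prompts(task, requirements))
```

The pre-hoc version needs one model call where the post-hoc version needs at least two, which is the cost argument behind the authors' recommendation.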

Self-correction, they conclude, is not a panacea. Approaches such as drawing on external sources of correct information should be considered among many other ways to fix the output of the programs. "Expecting these models to inherently recognize and rectify their inaccuracies might be overly optimistic, at least with the current state of technology," they write.
