This blog was co-written by Dave Ward, SVP, CTO of Engineering and Chief Architect, Carlos Pignataro, Cisco Distinguished Engineer, and Carole Gridley, Sr. Director, Cisco Sustainable Impact.

"If you want to go fast, go alone; but if you want to go far, go together."

Proverb, believed to be of African origins

Techno-Conservation, as we've coined the term, is a new way of describing the role of technologists working on conservation. There are already many academics, rangers, and conservationists who are conservation-technologists (i.e., who use technology to gather data and solve conservation problems). But there are far fewer engineers, coders, and architects looking from the other side: how to innovate technology for conservation. How can we build better, more fit-for-purpose, and more effective technology and products for protecting our ecosystems and species? The industry trying to apply its products, resources, and technological innovation to conservation and sustainability is so fractured and disorganized that it's ripe for consolidation and disruption. Today, the technological pieces of the puzzle don't fit together, and making them fit takes more than Open APIs and software integration. We are trying to direct Cisco's innovation programs and resources, and the use of our product portfolio, toward solving the toughest problems and applying our technology for a sustainable impact: tackling the issues affecting people, society, and our planet. In our innovation and engineering teams, we have organized the effort to establish and further the intersection between technology and conservation.

No doubt, there are a multitude of coders and engineers coming together to try to save species and ecosystems and to better people's lives. Some great communities we've engaged with are SmartParks and WildLabs. There are, of course, many small communities and companies that do specific tasks in the techno-conservation field, but bespoke or one-off deployments are almost unavoidable, given there is no notion of an integrated (or, more importantly, integrate-able) architecture. The ability of an individual or small group to have a big impact rests on being able to fit the piece of the puzzle they specialize in into the system and have it work with the other pieces. This is where our thinking comes in. We specialize in communications networks, data processing infrastructure, collaboration, and security, all of which are needed as a foundation for building a conservation solution. We don't build everything; no one does. Consequently, the obvious step is to enable every coder, company, academic, entrepreneur, ranger, conservationist, and technologist to integrate easily into a platform ... that ... just ... works.

Just to finish this introduction: why is technology so key to conservation, sustainability, and the understanding of nature? Distilling insight about species, ecosystems, and changes to our planet requires a great deal of data monitoring. That means vast data sets, including not only data from deployed sensors but also remotely sensed data from satellites, drones, and airplanes; increasingly complex analytical algorithms to derive insights from that data; and both post-processing (e.g., map-reduce) and real-time processing (e.g., a flood or encroachment alert). Dealing with vast land and ocean areas, migrating and moving animals, poaching pressures, and ecosystem patterns shifting due to climate change is simply a monumental task. You have to use every technological tool you can to find answers, insights, patterns, and solutions.

Conservationists, ecologists, academics, rangers, parks, landowners, and technologists have to come together, as none of us specializes in all the disciplines necessary to solve these pressing problems.

Last May, Cisco and Ecole Polytechnique held their 4th Annual Research and Innovation Symposium, focusing this year on how digital technologies could transform the conservation industry. We welcomed over 80 academics, conservationists, technologists, and businesses to learn from one another and share their expertise with the rest of the field. Read more about the Symposium in Part One of this Techno-Conservation Series.

With so many world-renowned experts from diverse disciplines together, Cisco's Sustainable Impact program held a "Technology & Conservation Workshop" the day after the Symposium with several key partners to help drive further Techno-Conservation architectural alignment. In this workshop, the team analyzed and explored four main areas:

One of Cisco's key goals for this workshop was to present and validate a new reference architecture, one built for conservation but also able to scale to future verticals. The notion of a communications network that can handle any sensor, whatever the power, distance, data volume, and mobility of the animal or thing being monitored, and whether the processing is real-time or post-processing, seemed applicable to many sustainability and straight-up business problems. This architecture brought together the different streams of work and started to converge the Techno-Conservation industry. The aim was really to de-fracture the industry and see whether there could be a set of design and deployment guidelines for a communications network and a hybrid-cloud-based data collection and processing architecture. Do the use cases really differ by type of sensor and analytics/visualization? Or are there fundamental differences in deployment that drive the bespoke nature of solutions?

Again, our hypothesis is that the different sensors, analytics, and visualization (e.g., operator portals) were really the major differences, not the comms network, security design, or data collection. In our experience, what is fundamentally different is the problem to be solved, the ecosystem being deployed in, and the operations centers and skills involved in the effort, not the technology.

So whether you are monitoring animals, securing an ecosystem or park, or working on mitigating human-wildlife conflict (HWC), in an ocean or on land, the key foundational blocks of the infrastructure are common.

An obvious step was to get our university, industry, and conservation partners into a room. Over the last 4+ years of working on conservation efforts, it has been fascinating to see how rare it is to have everyone at the same table. We just weren't communicating, and we were all missing an opportunity because of the lack of coordination. Yes, we realize it's very common for Silicon Valley companies to be like seagulls, but we are in it for the long haul. We don't want to donate tech or money and fly away. We don't want to just run a marketing campaign about saving species and the world. In CTAO we roll up our sleeves and go deep. Creating a target for discussion, the Conservation Reference Architecture, was a key catalyst for this community's discussion. It is the synthesis of extensive research, development, and deployment experience that we all shared. This isn't the full architecture, which would include an operations center, turning rangers' and conservationists' trucks into hotspots, or any specific sensor (e.g., collar, camera, or many others); it is the basic architecture of how to attach sensors to a communications network and get the data to a hybrid-cloud collector and analytics platform for processing. The ranger, academic, or conservationist JOCC (joint operations control center) is for another conversation.

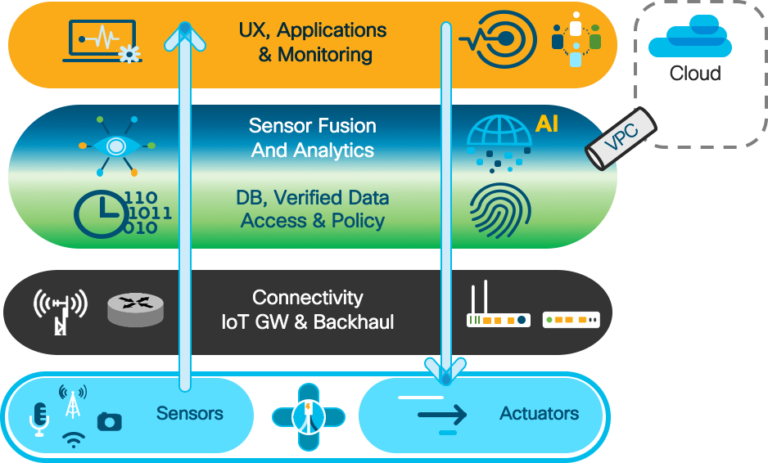

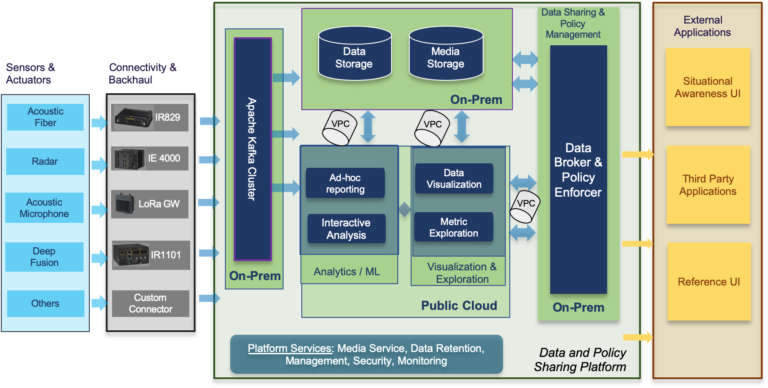

This layered architecture shows, within each layer, a distinct boundary of technology and functionality, while the arrows represent the flow of data. Looking at the outcomes, we can see how raw device data, sent through a connectivity mesh, is turned into policy-driven information, growing in context, and via advanced analytics reaches proactive conservation as the main outcome. The really interesting part of the architecture is that it exposes obvious points and layers of integration. There's a sensor layer where technologists and others building sensors can "run" as fast as possible and "plug into" (yes, often via radios) a communications network. That connectivity layer is a full "AnyNet," meaning all radios and wired connections toward the sensor or client are operated and managed as one network, regardless of access technology type. I need to take a tangent here, as this is a fundamental change to the Internet architecture that we are innovating. In the past, the access technology you used to get on the Internet defined your services and security. So, if you had a bunch of different radios, they were all operated, managed, and delivered as ships-in-the-night and considered completely separate networks. Ugh. Our goal was to allow sensor specialists to build the best sensor, while our job is to get it on the Internet via the best means possible and make it "one network" to operate and manage. It was also to be able to connect a wide variety of sensors with different capabilities: some that can process data themselves (e.g., cameras) and some that are just beacons or attached to animals.
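To make the "one network" idea a little more concrete, here is a minimal Python sketch, assuming a hypothetical role-based policy table. The names `POLICY_BY_ROLE`, `Attachment`, and `attach` are illustrative only, not a Cisco API. The point it shows is that the segment and QoS a device receives are looked up from what the device *is*, while the access technology it used is merely recorded.

```python
from dataclasses import dataclass

# Hypothetical role-based policy table: what a device may do depends on what it
# is, not on which radio it used to attach. Illustrative only, not a Cisco API.
POLICY_BY_ROLE = {
    "animal_collar": {"segment": "telemetry", "qos": "low_latency"},
    "thermal_camera": {"segment": "video", "qos": "high_bandwidth"},
    "ranger_handset": {"segment": "operations", "qos": "voice"},
    "visitor_phone": {"segment": "guest", "qos": "best_effort"},
}

@dataclass(frozen=True)
class Attachment:
    device_id: str
    role: str
    access_tech: str  # e.g. "lora", "wifi", "p-lte"; recorded, but not used for policy

def attach(att: Attachment) -> dict:
    """Return the network policy for a device, independent of its access technology."""
    policy = POLICY_BY_ROLE.get(att.role)
    if policy is None:
        # Unknown roles are quarantined rather than trusted by default.
        policy = {"segment": "quarantine", "qos": "best_effort"}
    return {"device_id": att.device_id, "via": att.access_tech, **policy}

# The same collar gets the same segment whether it reports over LoRa or private LTE.
over_lora = attach(Attachment("collar-17", "animal_collar", "lora"))
over_lte = attach(Attachment("collar-17", "animal_collar", "p-lte"))
print(over_lora["segment"], over_lte["segment"])  # telemetry telemetry
```

Keying policy on identity rather than on the radio is what lets a multi-radio collar roam between access networks without being re-provisioned as a different device.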

Above the communications network to the sensors, there's a backhaul network (often point-to-point, Wi-Fi, or satellite) that gets the data (streaming, discrete, event-driven, etc.) back to the data collector, say in a JOCC. There can be a single park, a park of parks, or regional JOCCs; the model fits. Any real-time processing (events, alerts, threats) and data deduplication, aggregation, and pre-processing would occur "on prem." Some data fusion and Artificial Intelligence (AI) are also applied there to handle the relationships and triggers among the different sensors. As one example, a fence sensor detects digging or a cut, which causes a camera to be trained on that spot, which in turn activates a metal detector. Obviously, handling the vast data sets, geo-coordinating them, and correlating them with satellite or other remotely sensed data may require the power of the cloud, so we are focused on a hybrid-cloud model: some processing on prem, some in the cloud. It really depends on the criticality of the data analysis, the alarms, and the data volume. More on these specific deployment design patterns in another conversation. We have many cloud and stack partners here and want to enable all of them, as they all have different strengths, services, AI capabilities, and costs.
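The fence-to-camera-to-metal-detector chain is essentially a set of trigger rules evaluated on prem. A minimal sketch, assuming a hypothetical rule table (the event names in `RULES` are made up for illustration), walks the rules breadth-first and guards against cycles so that a misconfigured rule set cannot loop forever:

```python
from collections import deque

# Hypothetical fusion rules: an event from one sensor triggers actions on others.
RULES = {
    "fence.cut": ["camera.train_on_fence_segment"],
    "fence.dig": ["camera.train_on_fence_segment"],
    "camera.train_on_fence_segment": ["metal_detector.activate"],
    "metal_detector.activate": [],
}

def cascade(initial_event: str) -> list:
    """Breadth-first walk of the trigger rules, visiting each action once."""
    ordered, seen = [], {initial_event}
    queue = deque([initial_event])
    while queue:
        event = queue.popleft()
        ordered.append(event)
        for follow_up in RULES.get(event, []):
            if follow_up not in seen:  # guard against rule cycles
                seen.add(follow_up)
                queue.append(follow_up)
    return ordered

print(cascade("fence.cut"))
# ['fence.cut', 'camera.train_on_fence_segment', 'metal_detector.activate']
```

A real deployment would attach location and timing to each event; the sketch only shows the rule-chaining shape.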

Last, perhaps the most varied technology space is at the top of the stack: the visualization and user/operator interface. Interestingly, it's critical to tie this layer to the types of sensors and the data they produce, as this is the layer where the user finally sees the data and insights from the entire deployment and tries to get value from all these techno tools.

Let me give a bit more color on the basics of this architecture. In this 4-layered approach we describe the layers from bottom to top:

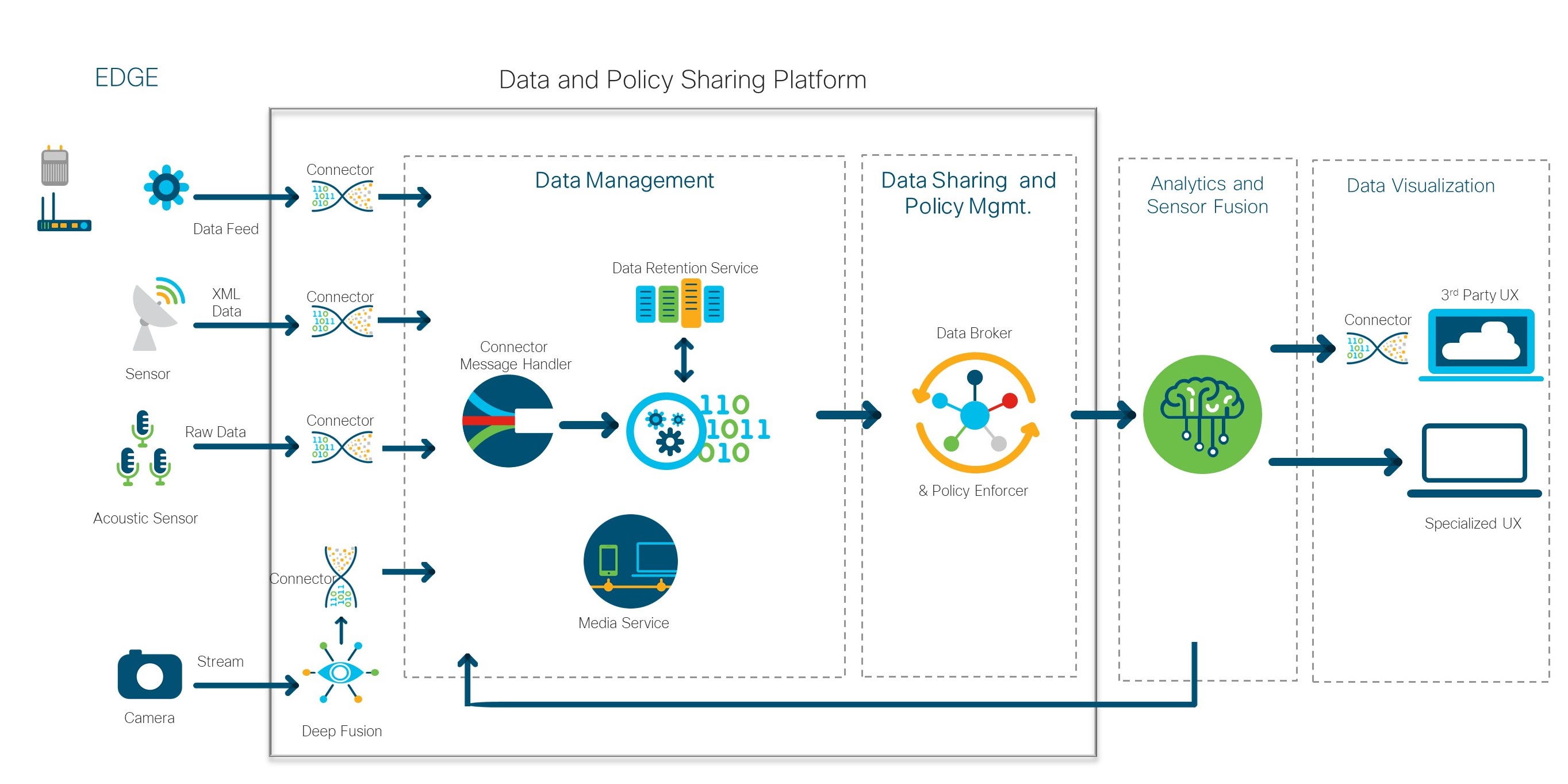

As an aside, while edge (or distributed) analytics, such as Deep Fusion video analytics, can be performed on individual data sources before they reach the data sharing module, Sensor Fusion requires multiple data sources and a data fabric; hence the hybrid cloud architecture.
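One way to see why fusion needs a shared data layer: corroboration only exists when detections from *different* sources can be compared. The sketch below is a generic time-and-distance clustering, assuming a hypothetical `Detection` record on a shared local grid and illustrative thresholds (`window_s`, `radius_m`, `min_sources`); it is not the deployed fusion logic.

```python
from dataclasses import dataclass
from math import hypot

@dataclass
class Detection:
    source: str  # e.g. "acoustic", "thermal_camera", "fence"
    t: float     # seconds on a shared clock
    x: float     # metres on a shared local grid
    y: float

def fuse(detections, window_s=30.0, radius_m=500.0, min_sources=2):
    """Greedily cluster detections close in time and space; raise an alert only
    when a cluster is corroborated by at least `min_sources` sensor types."""
    events = sorted(detections, key=lambda d: d.t)
    used, alerts = set(), []
    for i, anchor in enumerate(events):
        if i in used:
            continue
        cluster = [j for j, d in enumerate(events)
                   if j not in used
                   and abs(d.t - anchor.t) <= window_s
                   and hypot(d.x - anchor.x, d.y - anchor.y) <= radius_m]
        sources = {events[j].source for j in cluster}
        if len(sources) >= min_sources:
            used.update(cluster)
            alerts.append({"t": anchor.t, "sources": sorted(sources)})
    return alerts

readings = [
    Detection("acoustic", 100.0, 0.0, 0.0),
    Detection("thermal_camera", 112.0, 120.0, 80.0),
    Detection("acoustic", 900.0, 5000.0, 5000.0),  # isolated: no corroboration
]
print(fuse(readings))  # [{'t': 100.0, 'sources': ['acoustic', 'thermal_camera']}]
```

An edge analytic sees only one of these rows; the corroborated alert appears only where all of them meet.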

Also, the operations and management areas, including packaging, configuration, telemetry, and assurance, are an integral part of this stack. We have to monitor all the technology, know what's working, recover from failures/storms/destruction, and support the installation.
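A basic building block of that telemetry and assurance work is noticing when something stops reporting, whether it failed, was damaged in a storm, or was destroyed. A minimal sketch, assuming a hypothetical inventory of expected reporting intervals and a grace multiplier, flags devices that are silent or were never heard from:

```python
def stale_devices(inventory, last_seen, now, grace=3.0):
    """inventory: device_id -> expected reporting interval in seconds.
    last_seen: device_id -> timestamp of the last telemetry received.
    A device is stale if it never reported or has been silent for longer
    than `grace` times its expected interval."""
    stale = []
    for dev, interval in inventory.items():
        ts = last_seen.get(dev)
        if ts is None or now - ts > grace * interval:
            stale.append(dev)
    return sorted(stale)

# Hypothetical inventory and observations.
inventory = {"camera-3": 10, "collar-17": 300, "fence-A": 5, "mic-array-2": 60}
last_seen = {"camera-3": 1000.0, "collar-17": 400.0, "fence-A": 990.0}
print(stale_devices(inventory, last_seen, now=1020.0))  # ['fence-A', 'mic-array-2']
```

Per-device intervals matter here: a collar that reports every few minutes should not be flagged on the same clock as a fence sensor that reports every few seconds.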

Overall, the creation of this architecture follows several guiding design principles. Two important ones are reusability and scale of the single architecture across many dimensions, which result in several realizations of the model: across ecosystems and verticals, across protected species and types of entities, and across use cases. The goal is to enable the entire ecosystem of entrepreneurs, coders, AI folks, solution and system integrators, and UX and portal specialists to fit their pieces into the full puzzle without any one layer dictating to the others. In particular, we are trying to avoid the case where every single person involved, from conservationist to ranger to technologist, needs to understand each and every element of the entire architecture. We can all focus on what we specialize in and still work together through the layers of integration.

So, what makes a conservation architecture and solution unique? Why isn't this just "take what's on the shelf and deploy"? If it works in Silicon Valley, of course it'll work everywhere else... (Refer back to the seagull comment in the introduction...)

The difficult terrain, remote and vast geographies, and extreme environmental conditions compound the challenges of deploying technology in diverse ecosystems. A design needs to account for thick spider webs and playful monkeys who enjoy climbing on and playing with cameras, as well as the lack of pervasive telecommunications infrastructure and limited staff.

As an engineer, it's very satisfying to see technology deployed as a tool that helps successfully solve a problem. Considering both the major deployment challenges and the positive impact of these systems on the world, the technologies invented and deployed by the Sustainable Impact team demonstrate to us that Cisco innovation can make a difference. For example, one of our deployments in South Africa was recognized for innovation at the Better Society Awards this year, and Cisco & NTT Ltd. were named to Fortune's Change the World list for Connected Conservation.

Below are some examples of a few specific innovative technologies we've worked on:

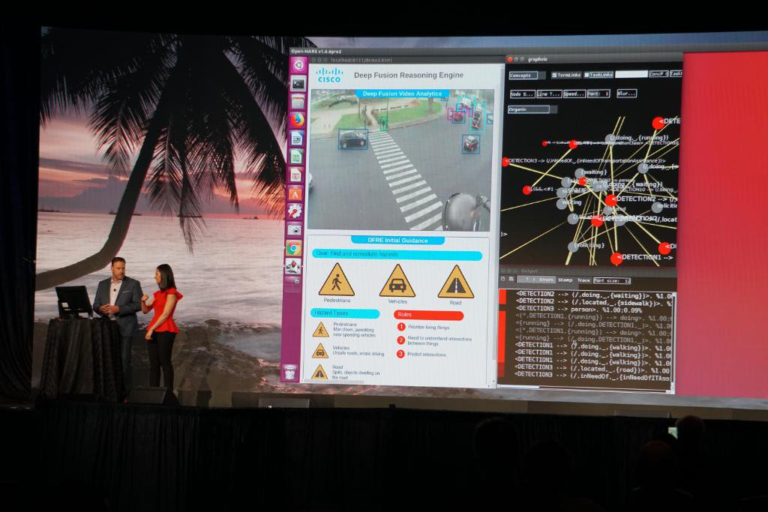

Deep Fusion

This advanced video analytics technology won the Cisco 2019 Data Science Award in the Benefiting Humanity category. The deployment of Cisco Deep Fusion (DF) video analytics uses Machine Learning to alert rangers to potential threats and encroachments. The DF system leverages Deep Learning-powered video analytics to identify vehicles, humans, weapons, and animals on thermal camera feeds and provides visual and auditory alerts, instead of requiring ranger operations staff to continuously monitor several cameras. Deep Fusion is even optimized with small-object (a few pixels) models. What we mean here is that we can detect humans at great distances, when in camera terms they occupy fewer than 10 pixels. We work in multiple spectra and on fixed-location, fixed-wing, and drone platforms. This technology provides proactive monitoring, freeing up CCTV operators and allowing rangers to scale, while the system makes thousands of decisions and reports the ones requiring human intervention. It's a great use of compute platforms and advances in imaging to not only relieve rangers but also help them do their job better and more safely.
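To get a feel for why "fewer than 10 pixels" arrives so quickly, here is a rough pinhole-camera calculation. The sensor resolution (512 rows), vertical field of view (9.3 degrees), and 1.8 m person height are assumptions chosen for illustration, not Deep Fusion or camera specifications. The projected size is the target height divided by the height of the frame at that distance, times the number of rows.

```python
import math

def pixels_on_target(target_m, distance_m, fov_deg, pixels_across_fov):
    """Approximate pixels a target spans along one image axis (pinhole camera model)."""
    frame_extent_m = 2.0 * distance_m * math.tan(math.radians(fov_deg) / 2.0)
    return pixels_across_fov * target_m / frame_extent_m

def range_for_pixels(target_m, min_pixels, fov_deg, pixels_across_fov):
    """Distance at which the target shrinks to `min_pixels` along that axis."""
    half_angle = math.radians(fov_deg) / 2.0
    return pixels_across_fov * target_m / (2.0 * min_pixels * math.tan(half_angle))

# Hypothetical thermal camera: 512 rows over a 9.3 degree vertical field of view,
# looking at a 1.8 m tall person. Illustrative values only.
ROWS, VFOV_DEG, PERSON_M = 512, 9.3, 1.8
for d in (250, 500, 1000, 2000):
    print(f"{d:>5} m: {pixels_on_target(PERSON_M, d, VFOV_DEG, ROWS):5.1f} px tall")
print(f"under 10 px beyond ~{range_for_pixels(PERSON_M, 10, VFOV_DEG, ROWS):.0f} m")
```

With these assumed numbers, a person is already under 10 pixels tall at a few hundred metres, which is why small-object models matter for long-range detection.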

Cisco's Ruba Borno (read her blog) and Sean Curtis at Partner Connections Week 2019.

Data and Policy Sharing Platform

The data authorization platform is optimized for environments with many diverse sensor inputs and many potential consumers (rangers, operators, and others) with different access policies. Since many sensors provide video, audio, and other rich media data, the data sharing platform includes key data retention and media management services. The sensitivity of the data in this system is extremely high: access to it may give a poacher the exact coordinates of a herd. Therefore, data segmentation and access control are vital to make certain that critical data doesn't get into the wrong hands. This data platform enables pre-emptive decisions about data access and authorization, and about how, where, and when to apply AI algorithms.
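As an illustration of role-based access applied to location data, here is a minimal sketch assuming hypothetical roles and precision levels (`PRECISION_BY_ROLE`, `Sighting`, and `view` are made-up names, not the platform's API). Unknown roles are denied by default, coordinates are rounded to each role's allowed precision, and sensitive records never reach the public role at all.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Sighting:
    species: str
    lat: float
    lon: float
    sensitive: bool  # e.g. rhino herd locations

# Hypothetical policy: decimal places of coordinates each role may see.
# 4 decimals is about 11 m; 1 decimal is about 11 km (latitude).
PRECISION_BY_ROLE = {"jocc_operator": 4, "ranger": 4, "researcher": 2, "public": 1}

def view(sighting: Sighting, role: str):
    """Return what `role` may see of a sighting, or None if access is denied."""
    digits = PRECISION_BY_ROLE.get(role)
    if digits is None:
        return None  # deny by default
    if sighting.sensitive and role == "public":
        return None  # sensitive species never leave the trusted segment
    return {"species": sighting.species,
            "lat": round(sighting.lat, digits),
            "lon": round(sighting.lon, digits)}

herd = Sighting("black rhino", -24.123456, 31.654321, sensitive=True)
print(view(herd, "researcher"))  # coordinates coarsened to 2 decimals
print(view(herd, "public"))      # None
```

Coarsening rather than simply hiding lets researchers still do landscape-scale analysis without ever holding coordinates precise enough to locate an animal.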

The deployment of a microphone-array acoustic sensor integrated into the data layer provides a diverse data input that drastically improves detection of gunshots and animal behavior. Acoustic and other waveform data is quite challenging to process and requires heavy use of AI to determine what we are hearing and translate it into behaviors or critical activities for a ranger or conservationist.
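One classic reason to use an *array* rather than a single microphone is direction finding via time difference of arrival (TDOA). The sketch below shows that general technique for one microphone pair; it is not necessarily what the deployed sensor does. It assumes far-field sound, a speed of sound of 343 m/s, a hypothetical 1 m microphone spacing at 48 kHz, and a synthetic click rather than real recordings.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # in air at roughly 20 degrees C

def best_lag(a, b, max_lag):
    """Lag (in samples) of signal b relative to a that maximises their cross-correlation."""
    def score(lag):
        return sum(a[i] * b[i + lag] for i in range(len(a)) if 0 <= i + lag < len(b))
    return max(range(-max_lag, max_lag + 1), key=score)

def bearing_deg(mic_a, mic_b, spacing_m, sample_rate_hz):
    """Far-field angle of arrival, measured from broadside of a two-microphone pair.
    Positive angles mean the sound reached mic_a first."""
    max_lag = int(spacing_m / SPEED_OF_SOUND_M_S * sample_rate_hz) + 1
    lag = best_lag(mic_a, mic_b, max_lag)
    ratio = SPEED_OF_SOUND_M_S * lag / (sample_rate_hz * spacing_m)
    return math.degrees(math.asin(max(-1.0, min(1.0, ratio))))

# Synthetic impulsive "shot": the same short click arrives 10 samples later at mic B.
FS, SPACING = 48_000, 1.0
click = [1.0, 0.6, 0.3, 0.1]
mic_a = [0.0] * 400
mic_b = [0.0] * 400
for k, v in enumerate(click):
    mic_a[100 + k] = v
    mic_b[110 + k] = v
print(round(bearing_deg(mic_a, mic_b, SPACING, FS), 1))  # 4.1
```

With several pairs at different orientations, the bearings can be intersected to estimate a location; classifying *what* the sound was is the separate, AI-heavy part described above.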

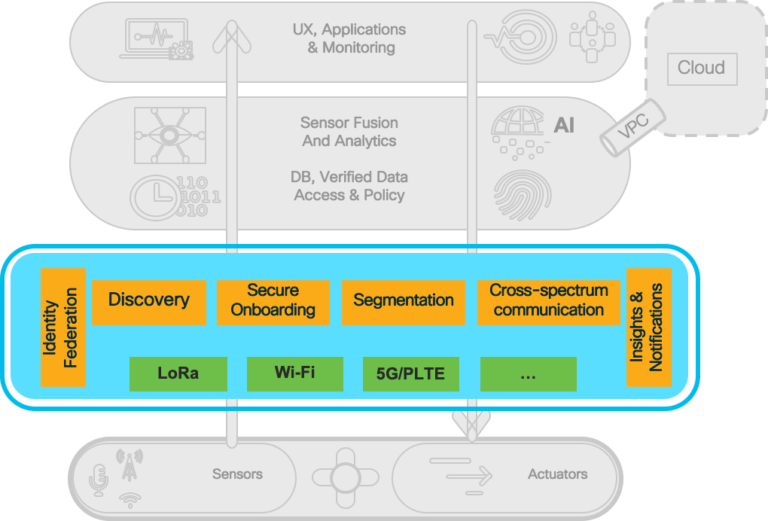

In the architectural description above, we introduced the concept of AnyNet for conservation and for solving Internet architectural problems. Complex environments such as large-scale IoT deployments in enterprises and industries show that wireless technologies such as Wi-Fi, CBRS, LPWAN, UWB, Bluetooth, and 5G/Private LTE will need to coexist, as there is no one-size-fits-all technology for sensor, device, or human access to the Internet. As stated earlier, letting the access technology determine which services and security are delivered is very limiting, and it ultimately requires completely replicating operations, services, and security for each network independently. Further, publishing fragmented data to the data lake or cloud and relying solely on application- and data-level effort for integration and cross-communication creates new challenges in terms of trust, security, and efficiency, and does not apply to all use cases. There are therefore more optimal solutions that unify the access technologies and the security of the network, data, and applications.

Simplifying what I'm trying to say: a conservation environment shares many of the same requirements as other industries and has the same difficulties when it comes to securely and seamlessly connecting and identifying the things, animals, and people roaming around. Imagine how to connect and make the best use of data collected from LoRa-based wildlife collars, rangers' P-LTE devices, a Wi-Fi hotspot in a ranger's vehicle, and park visitors' smartphones connected to the guest Wi-Fi. It would be a nightmare to manage these networks independently. Also, mobile devices have both Wi-Fi and cellular capabilities, so let's use both radios at the same time. Collars, sensors, and cameras can have multiple types of radios; let's use them all!
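"Use both radios at the same time" can mean different things for different traffic. A minimal sketch, assuming a hypothetical link table for one ranger vehicle, splits bulk data across every bulk-capable link that is up in proportion to its capacity, and sends small urgent alerts over the first link in an assumed preference order that is currently up. `LINKS`, `ALERT_PREFERENCE`, and both functions are illustrative names, not a product feature.

```python
# Hypothetical radios on one ranger vehicle; capacities are illustrative.
LINKS = {
    "wifi":  {"up": True, "capacity_kbps": 20_000, "bulk": True},
    "p-lte": {"up": True, "capacity_kbps": 5_000,  "bulk": True},
    "lora":  {"up": True, "capacity_kbps": 5,      "bulk": False},  # too slow for bulk data
}
ALERT_PREFERENCE = ["p-lte", "wifi", "lora"]  # assumed: widest coverage first

def split_bulk(links, demand_kbps):
    """Spread bulk traffic over every bulk-capable link that is up, proportional to capacity."""
    usable = {n: l["capacity_kbps"] for n, l in links.items() if l["up"] and l["bulk"]}
    total = sum(usable.values())
    if total == 0:
        return {}
    carried = min(demand_kbps, total)
    return {n: carried * cap / total for n, cap in usable.items()}

def pick_alert_link(links, preference=ALERT_PREFERENCE):
    """Send a small urgent alert over the first preferred link that is currently up."""
    for name in preference:
        if links.get(name, {}).get("up"):
            return name
    return None

print(split_bulk(LINKS, 10_000))  # {'wifi': 8000.0, 'p-lte': 2000.0}
lte_down = {**LINKS, "p-lte": {**LINKS["p-lte"], "up": False}}
print(pick_alert_link(LINKS), pick_alert_link(lte_down))  # p-lte wifi
```

Treating all radios as one pool is only practical when they sit under one policy and identity model, which is the AnyNet argument above.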

With this heterogeneous future and more and more connected objects "on the move", such environments need to not only onboard different types of devices (i.e., things) but also segment and securely control their communications. This requires overcoming a number of challenges, including normalizing and coalescing the identity and roaming capabilities in IoT.

This is where this conservation architecture and its AnyNet capabilities can make a difference, by enabling advanced mobility of things and people across diverse networks and spectrum in a secure way. By extending the existing OpenRoaming Identity Federation (another innovation we've created) to IoT, and building the capacity to discover, identify, and control the communications of roaming assets (covering both indoor and outdoor scenarios), such an architecture can provide trusted insights in real time irrespective of the over-the-air access technology and spectrum used.
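Identity federations generally work by routing an identity of the form `user@realm` to the home identity provider that vouches for that realm. The sketch below shows only that general realm-lookup pattern, assuming a hypothetical federation directory with `example.org`/`example.net` realms; it is not the OpenRoaming protocol or its message flow.

```python
# Hypothetical federation directory: realm -> identity provider that vouches for it,
# plus the network segment a visited network should place those identities in.
FEDERATION = {
    "parks.example.org": {"idp": "park-authority-idp", "segment": "operations"},
    "collars.example.net": {"idp": "collar-vendor-idp", "segment": "telemetry"},
}

def route_identity(identity: str):
    """Split user@realm, find the home identity provider, and return where to
    send the authentication request and which segment to use on success."""
    _, sep, realm = identity.rpartition("@")
    if not sep or not realm:
        return None  # no realm, so nothing to route on
    entry = FEDERATION.get(realm.lower())
    if entry is None:
        return None  # not a federation member: fall back to guest access
    return {"realm": realm.lower(), **entry}

print(route_identity("collar-17@collars.example.net"))
print(route_identity("phone@unknown.example"))  # None
```

The visited network never needs its own account for the collar; it only needs to trust the federation and know which realm to ask.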

Overall, our goal is to create a multi-access Internet, where a ranger or conservationist doesn't have to worry about the types of radios and we can help get everything on the Internet. We can also help rangers and solution partners by unifying the different radio and wired environments into "one network." So, the Any in AnyNet is any and all access technologies, operated and managed as one network.

A hybrid cloud is a computing environment that combines and binds together a public cloud and a private environment, either on-premises data centers or private cloud resources. This allows workloads and applications to be shared seamlessly between what runs on prem and what can be done in the cloud. It lets a JOCC or conservationist cost-effectively manage their data and analytics/AI processing while still handling critical, real-time processing locally, including when an uplink fails. A number of use cases are enabled by hybrid cloud deployments. For example, some AI workloads may not be able to run locally given the equipment installed (e.g., no expensive GPUs on site), or satellite images may be more cost-effectively stored in the cloud. At the same time, camera data handled by technology like Deep Fusion is best processed locally, for real-time alerts and to avoid expensive uplink costs and bandwidth. By extending and merging the capabilities and strengths of each cloud environment, we can optimize where each use case is placed. Specifically, enhanced capabilities enabled for Techno-conservation:

In addition to flexibility and self-service functionality, the key advantage of cloud bursting (temporarily extending on-prem workloads into the public cloud when local capacity runs out) is cost savings: users only pay for the additional resources when demand bursts beyond what is available locally. A hybrid cloud architecture also provides increased availability and accessibility, with built-in redundancy and replication for our most critical data.
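The economics of bursting can be sketched with simple arithmetic. All prices and sizes below are hypothetical, and the model deliberately ignores egress fees, power, and staffing. Owning enough capacity for the peak costs `peak_units * unit_cost_month`; bursting costs the baseline plus pay-per-use hours, and it stays cheaper until burst usage reaches the break-even point.

```python
def monthly_cost_peak_provisioned(peak_units, unit_cost_month):
    """Own enough local capacity for the worst case all month."""
    return peak_units * unit_cost_month

def monthly_cost_with_bursting(base_units, unit_cost_month, burst_unit_hours, cloud_cost_per_unit_hour):
    """Own the baseline locally and rent the rest only while it is needed."""
    return base_units * unit_cost_month + burst_unit_hours * cloud_cost_per_unit_hour

def break_even_unit_hours(peak_units, base_units, unit_cost_month, cloud_cost_per_unit_hour):
    """Burst usage above which owning peak capacity becomes the cheaper option."""
    return (peak_units - base_units) * unit_cost_month / cloud_cost_per_unit_hour

# Hypothetical month: 2 GPU servers normally, 10 for 48 hours after a drone survey.
peak = monthly_cost_peak_provisioned(peak_units=10, unit_cost_month=1500)
burst = monthly_cost_with_bursting(base_units=2, unit_cost_month=1500,
                                   burst_unit_hours=8 * 48, cloud_cost_per_unit_hour=3)
print(peak, burst)                               # 15000 4152
print(break_even_unit_hours(10, 2, 1500, 3))     # 4000.0
```

The shorter and spikier the peak, the larger the saving; a workload that bursts most of the month is better served by owned capacity.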

All of these enhanced deployment capabilities enable three flexible solutions vital for ecosystem techno-conservation environments:

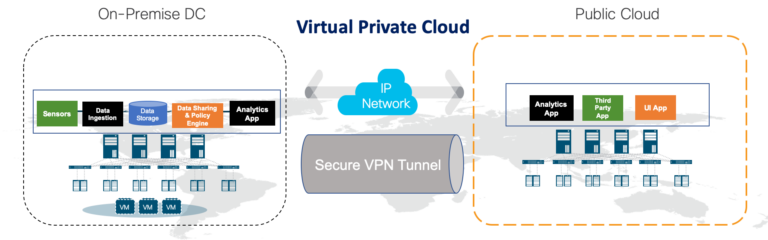
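The on-prem versus cloud trade-offs described in this section (real-time alerts and uplink failures handled locally, GPU-heavy or satellite work in the cloud, expensive uplinks avoided) can be expressed as a simple placement rule. This is a hedged sketch: the `Workload` fields, the rule order, and the thresholds are all assumptions made for illustration, not a described Cisco design pattern.

```python
from dataclasses import dataclass

@dataclass
class Workload:
    name: str
    realtime: bool               # must produce alerts within seconds
    needs_gpu: bool
    data_gb_per_day: float
    data_in_cloud: bool = False  # e.g. satellite imagery already hosted in the cloud

def place(w: Workload, uplink_up: bool, local_gpu: bool, uplink_budget_gb_per_day: float) -> str:
    """Illustrative hybrid-cloud placement rule; ordering and thresholds are assumptions."""
    if w.realtime:
        return "on_prem"        # alerts must keep working when the uplink fails;
                                # assumes local hardware was sized for them
    if w.data_in_cloud:
        return "cloud"          # process data where it already lives
    if not uplink_up:
        return "on_prem_queue"  # hold non-urgent work until the uplink returns
    if w.needs_gpu and not local_gpu:
        return "cloud"
    if w.data_gb_per_day > uplink_budget_gb_per_day:
        return "on_prem"        # too costly to ship raw data over the uplink
    return "cloud"

video = Workload("thermal video alerts", realtime=True, needs_gpu=True, data_gb_per_day=200.0)
sat = Workload("satellite land-cover change", realtime=False, needs_gpu=True,
               data_gb_per_day=50.0, data_in_cloud=True)
tracks = Workload("collar track archive", realtime=False, needs_gpu=False, data_gb_per_day=0.5)
for w in (video, sat, tracks):
    print(w.name, "->", place(w, uplink_up=True, local_gpu=False, uplink_budget_gb_per_day=5.0))
```

In practice these rules would be policies in an orchestration layer, but writing them down this explicitly is a useful way to agree with partners on where each workload belongs.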

On the flip side, when working with a hybrid cloud deployment and runtime environment, security can never be forgotten. Conservation and ecology data is extremely sensitive data on very sensitive populations of critical species. Many poachers, and those who help poachers, want precisely this data. Securing all endpoints in the network, segmenting every endpoint, and securing the IPsec VPN tunnel between the public and private cloud are some of the considerations for end-to-end security and visibility across the network. This functionality is also how you guarantee bandwidth among sensors (video vs. acoustic vs. animal sensors), which is another critical engineering exercise. The overall goal is to have on-prem and cloud resources deployed, operated, and managed with exactly the same security and services, as if they were side by side on one network.
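Guaranteeing bandwidth among sensor classes on a shared uplink is commonly handled with minimum guarantees plus weighted sharing of the spare capacity. The sketch below is a generic weighted water-filling allocator under that assumption, with hypothetical class names, guarantees, weights, and demands; it is not the actual QoS configuration of any deployment. Weights must be positive.

```python
def allocate(capacity, classes):
    """classes: name -> dict(min=guaranteed kbps, weight=positive share of spare, demand=kbps wanted).
    Guarantees are served first; spare capacity is then shared by weight among
    classes that still want more (weighted water-filling)."""
    alloc = {n: min(c["min"], c["demand"]) for n, c in classes.items()}
    spare = capacity - sum(alloc.values())
    if spare < 0:
        raise ValueError("guarantees exceed link capacity")
    active = {n for n, c in classes.items() if alloc[n] < c["demand"]}
    while spare > 1e-9 and active:
        total_w = sum(classes[n]["weight"] for n in active)
        granted = 0.0
        for n in list(active):
            offer = spare * classes[n]["weight"] / total_w
            take = min(offer, classes[n]["demand"] - alloc[n])
            alloc[n] += take
            granted += take
            if alloc[n] >= classes[n]["demand"] - 1e-9:
                active.discard(n)  # satisfied: stop offering it more
        spare -= granted
    return alloc

# Hypothetical 10 Mbps uplink shared by video, acoustic, and animal-tag traffic.
classes = {
    "video": {"min": 4000, "weight": 3, "demand": 20000},
    "acoustic": {"min": 500, "weight": 1, "demand": 800},
    "animal_tags": {"min": 100, "weight": 1, "demand": 150},
}
print(allocate(10_000, classes))
```

Even with video demanding twice the link capacity, the acoustic and tag traffic keep their full demand, and video takes everything that is left over.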

The following diagram depicts details of a Virtual Public Cloud hybrid realization of the techno-conservation data sharing platform:

The topic of Techno-Conservation was central to the Sustainable Impact program at Cisco Live US, June 2019, in San Diego. It was described in the session titled "The Network in IoT: From Preventing Poaching to Parking Your Car," as well as throughout the Open Conservation theater presentations at the Cisco Innovation Network booth.

Open Conservation was also featured as one of Cisco's key innovations showcased at the Grace Hopper Celebration 2019, where we encouraged students, post-grads, and women from across the technology industry to pursue careers in techno-conservation and sustainability. Using virtual reality, we were able to give attendees a look at the work we are doing in South Africa, and an up-close experience with the rhinos that we are working to save.

In 2020, the Open Conservation program will expand outside of Africa for the first time, pursuing projects across South East Asia and India. We will be looking into a number of use cases beyond poaching, focusing on factors such as flooding and human-wildlife conflict, which are equally responsible for the rapid decline of animal populations. We are now working in new and different ecosystems, with new species and communities of people, rangers, academics, and conservationists. The goal is to have a real Sustainable Impact on our planet and its population of people and wildlife.

We are going to take the integration layers and direction of the architecture described above to larger communities by creating an Open Conservation open source community. We want to document use cases and deployment architectures in the open, and to enable coders to develop everything from sensor connectors and data processing to analytics/AI algorithms and operational/visualization portals. The goal is to bring the Techno-Conservation community together and enable a vibrant community of entrepreneurs, academics, conservationists, ecologists, and technologists. There are many roles, and room for people of all backgrounds, to save species, ecosystems, and the planet. We have also opened Requests for Proposals (RFPs) for Global Conservation in our Cisco Research Center (CRC), for "Sustainable Economic Development" and "Science and Technology Meet for Species Conservation".

Cisco is dedicated to solving the toughest problems affecting the environment and endangering species, but we can't do it alone. Succeeding in this effort requires the creativity, collaboration and cooperation of a network of partners and contributors. Techno-conservation starts with learning more and getting involved in any way that you can. Continue following the progress that Cisco is making in applying technology towards solving the biggest challenges affecting our planet and all its inhabitants.

Tags:

3. Innovation

Internet of Things (IoT)

Artificial Intelligence (AI)

B. Sustainable Development

partners

Cisco Partner Ecosystem

Internet Connectivity

#ServiceProviders

Conservation
