Snorkel's Data-centric Foundation Model Development suite will let a non-programmer use a text prompt with natural language queries to get a neural net to automatically label data. The output labels become a means to supervise the training of a downstream machine learning program, which might be a simple classifier.

SnorkelIn the last big upsurge in artificial intelligence, in the late '70s and '80s, a popular approach took hold known as expert systems, programs that contained rules for tasks based on human knowledge typed into the computer.

Expert systems ultimately failed because they both proved too hard to codify -- what can an expert truly articulate about what they know? -- and also too arduous to build and maintain. They didn'tscale, in other words.

In an intriguing twist on that earlier approach, Snorkel, a three-year-old AI startup based in San Francisco, Wednesday unveiled tools to, as they put it, bring the human domain expert back into the driver's seat when it comes to developing neural networks.

Snorkel's Data-centric Foundation Model Development, as the offering is called, is an enhancement to the startup's flagship Snorkel Flow program. The new features let companies write functions that automatically create labeled training data by using what are called foundation models, the largest neural nets that exist, such as OpenAI's GPT-3.

The new functions in Snorkel Flow let a person who is a domain expert but not a programmer create a workflow that will then automatically generate labeled data sets that can be used to train the foundation programs for specific tasks.

"We're excited about up-skilling and empowering the non-developer, the subject matter expert, to be able to drive more of the process" of making AI, Snorkel co-founder and CEO Alex Ratner told in an interview via zoom.

"These are the people that have the domain knowledge, and they're often, kind-of, put in a silo around just labeling data by hand," said Ratner. "We want them to sit where they should, where we think they need to, in the driver's seat, co-piloting with the data scientist or leading on their own."

Also:Is Google's Snorkel DryBell the future of enterprise data management?

Foundation models such as GPT-3, and also OpenAI's Dall?E 2 and Google's RoBERTa, are increasingly in vogue because of their ability to generate text and images which can then be applied to a wide range of corporate tasks, from automated customer service bots to creating corporate documentation to creating stock photography.

The foundation models are data hogs. GPT-3 was trained by Open-AI in 2020 using the popular CommonCrawl dataset of Web pages from 2016 to 2019, 45TB worth of compressed text data. OpenAI had to curate that down to a manageable 570GB of data. To train such models is prohibitive for most companies.

Some of the foundation models, such as GPT-3, can be accessed as cloud services, which eases some of the burden for enterprises that wish to use them. A company can just rent the models and do some tweaking to suit their use case.

But in order to productively use a foundation model, a corporation still has to adjust the functioning of the model to the specific use cases, such as legal or marketing, what's known as "fine tuning" a model. And capturing the domain knowledge necessary can require tens of gigabytes of new data, with appropriate labels, meaning, hints for the program.

"There's there's always this kind of problem of disillusionment" with deep learning AI among enterprises, said Ratner, "and a sense that, Hey, we've put so much down on the AI, there's so much out there in the open-source world, there's so much inside our enterprise organization, why aren't we moving faster?"

The reason companies aren't moving faster often has to do with that pile of data, he said.

Also:'Weird new things are happening in software,' says Stanford AI professor Chris Re

"It's always been, What about the data?" said Ratner. And in particular, the labeled training data that these models rely on, and still rely on even in the foundation model era, to be adapted and fine-tuned for specific use cases, especially complex high-performance ones in the enterprise that we see with our customers."

Snorkel's Snorkel Flow software is a commercial effort that grew out of open-source academic research at Stanford University's AI Lab starting in 2015 undertaken by Ratner and fellow researchers. That work lead to a program co-developed with Google researchers, Snorkel Drybell, which Ratner said had a "massive impact" within Google.

The Drybell program offered a way to build rules that would then automatically generate labels for data, thereby saving enormous manual effort for a person to label each and every piece of data.

Along the way, Ratner observed that it was really hard to implement a rules engine.

"It took six to nine months to get the workflow around it, to get it deployed," Ratner recalled of the Drybell program. "That was a lightbulb moment," he said.

Also:Is Google's Snorkel DryBell the future of enterprise data management?

Drybell, he said, was "one of several examples that showed what the potential for impact in the real world, but also how much engineering and prioritization work had to had to bridge that gap, which was a big motivation for finally spinning out the company in 2019."

There might need to be a commercial package to help companies use the approach.

Ratner, and his thesis advisor, Christopher Re, and three other colleagues went on to form the company in 2019 with venture capital from prominent venture firms such as Greylock Partners and In-Q-Tel.

Re is also the founder, along with another advisor to Ratner, Stanford computer science professor Kunle Olukotun, of AI hardware startup SambaNov Systems.

With the new Model Development capability, Snorkel takes the problem of very data-hungry programs and turns it on its head. Rather than come up with training data for such massive programs as GPT-3, the generative function of those models can be used to produce training data for a smaller neural network, or even for forms of AI that have nothing to do with neural nets.

"We started experimenting," said Ratner, "with this idea of rather than trying to find a foundation model and plug it into production, use the foundation model to auto-label data to accelerate the data-centric development loop, and then look for a different deployable model."

Also:'We are the best-funded AI startup,' says SambaNova co-founder Olukotun following SoftBank, Intel infusion

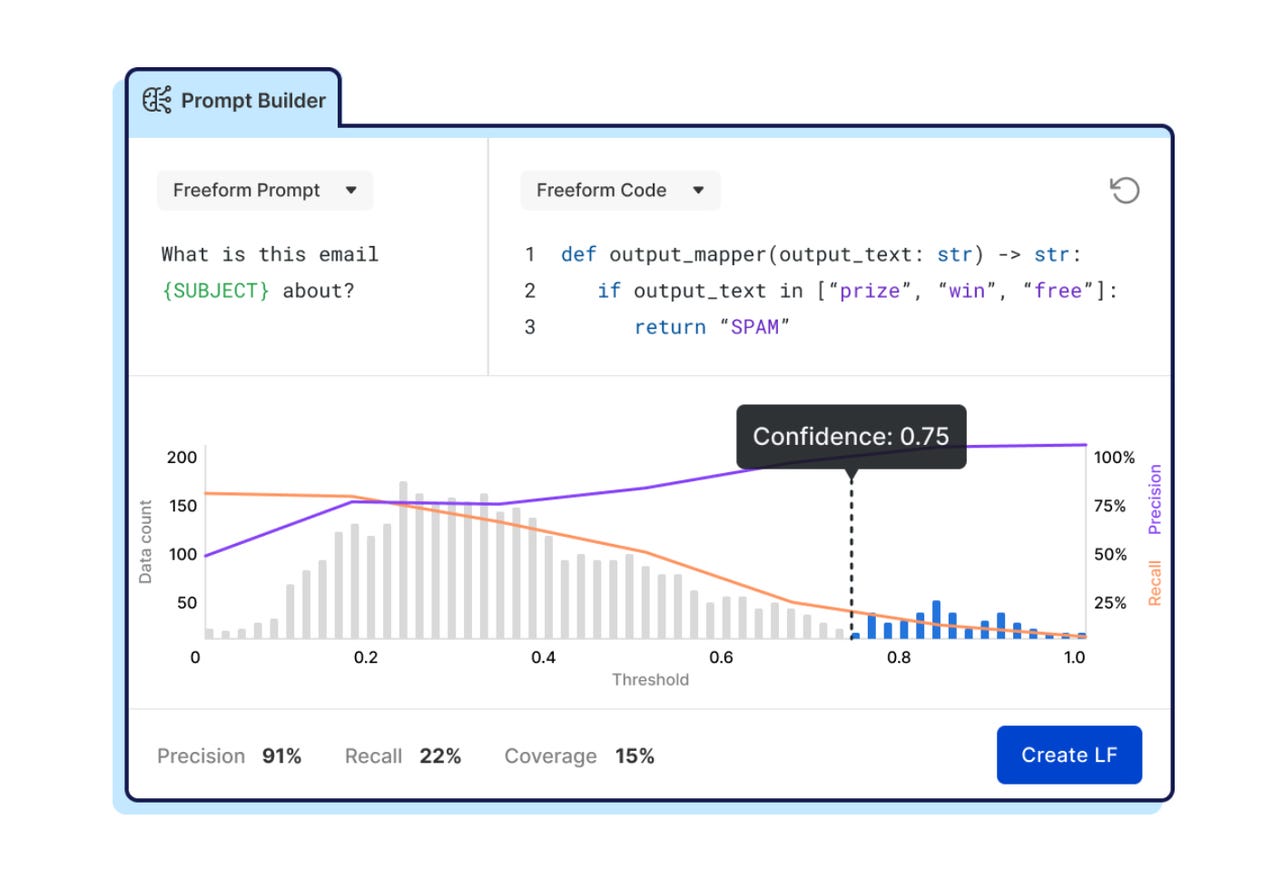

The Model Development suite has three components that can be used independently or in combination. One is to use the text prompt with natural language queries that will prompt a foundation model to generate data labels as output.

"How do you marry the expert system knowledge that you can express through a rule or a heuristic, or now a question in a prompt, with the massive kind of generalization capabilities of these statistical models?" is how Snorkel thinks about getting human beings back in the loop as subject-matter experts, explains Snorkel's co-founder and CEO, Alex Ratner.

SnorkelThose natural language queries can be something that a domain expert knows how to formulate, such as "Do these contract documents pertain to trademark issues?" That query then becomes a way to get the program to apply labels as to whether a document is or is not a trademark-related document. The total output then become labels to train a classifier to sort documents as trademark-related or not related.

The second option with the Model Development tools is what's called "warm start." A foundation model's ability to automatically generate output when shown zero or a few examples - zero-shot and few-shot learning - become a mechanism by which to automatically generate data labels.

And, the third option, the foundation models themselves can be used by applying a smaller data set that simply "fine-tunes" the large foundation model.

The idea behind all three approaches is that foundation models themselves are "foundations to build on, but they don't magically solve all of your real world problems."

"You still have to build on top of them. You have to fine tune about them, figure out how to deploy them," said Ratner.

"We're embedding foundation models in a number of ways into that workflow to accelerate it and and basically bridge the gap."

Some customers have been working with the Model Development function for months now and have seen their time required to build machine learning programs dramatically reduced.

Also: AI challenger Cerebras assembles modular supercomputer 'Andromeda' to speed up large language models

Ratner recalled the example of a customer that is a top five bank that wanted to train an AI program to extract things from bank documents pertaining to anti-money laundering concerns and Know Your Customer.

That task involved scanning PDFs, multi-hundred-page documents, "very complex, very bespoke," said Ratner, and also "very critical, for regulatory reasons, for the bank to get these extractions correct."

"If you tried to construct a labeled training set by hand to train ML to do those extractions, it would have been months of expert legal time" by a human domain area specialist. The Snorkel Flow software was able to cut that to mere weeks, said Ratner.

"The really exciting result with this foundation model capability is actually taking that down to hours or days by using foundation models to warm-start and then auto-label the data."

Snorkel Flow is about getting all kinds of sources of data into a cycle of updating labels automatically, to constantly improve the labeled data available to try AI models.

SnorkelThe Model Development capability points to a fundamental truth about deep learning, said Ratner: It's now more about the data than about the particular neural network architecture, at least as measured by where the greatest amount of labor is expended.

"AI is becoming more data-centric versus model centric in terms of what is the average iteration a data scientist does to get something to work," he added. "Data scientists are actually doing a lot of their work trying to label related to improving and iterating on that data" rather than revising models.

Customers of Snorkel had previously had to spend six months or more labeling data by hand before they could run a single program, notes Ratner.

Using the Model Development suite requires no knowledge about foundation models by the person running the labeling task. If one happens to be interested in the details of the foundation models, however, "we have some of the goodies under the hood," he noted, such as the ability to write custom prompts or prompt templates for the foundation models.

By flipping the paradigm, foundation models can be a more-efficient aid to smaller, less-demanding models that perform better, ultimately.

By using the general capabilities of foundation models, it's possible to "speed up the training of these specialist deployment models that go in your existing and ops infrastructure," said Ratner. "You can actually get models that are 10,000 times as small as a big foundation model but are more accurate at the target task."

The final program might be a simple regression model or XG Boost or another kind of approach said Ratner. Such models have the added virtue that they are not only easier to run in production, and can be more accurate than generic pre-trained programs such as GPT-3, but they often, too, are much more interpretable and manageable than the proverbial black box of large neural nets.

"These kinds of use cases often have stricter governance around them," said Ratner of enterprise applications such as Know Your Customer.

Ratner believes the Model Development function in some ways makes good on the old expert system approach of putting the human subject-matter expert back in a central role.

"How do you marry the expert system knowledge that you can express through a rule or a heuristic, or now a question in a prompt, with the massive kind of generalization capabilities of these statistical models?" Is one way to think about it.

"Don't ask your subject-matter expert to just kind of click on 10,000 contracts like you're playing 20,000 questions," he suggested. "Have them write down their domain knowledge - again, previously, we called that a labeling function, now we offer it in the form of a prompt, which is another type of labeling function."

That human effort is used "to bootstrap and train a model that can generalize to the long tail of patterns that modern human models are so good at soaking up and recognizing."

Горячие метки:

Искусственный интеллект

3. Инновации

Горячие метки:

Искусственный интеллект

3. Инновации