For lack of rich competition, some of Nvidia's most significant results in the latest MLPerf were against itself, comparing its newest GPU, H100 "Hopper," to its existing product, the A100.

NvidiaAlthough chip giant Nvidia tends to cast a long shadow over the world of artificial intelligence, its ability to simply drive competition out of the market may be increasing, if the latest benchmark test results are any indication.

On Wednesday, the MLCommons, the industry consortium that oversees a popular test of machine learning performance, MLPerf, released the latest numbers for the "training" of artificial neural nets.

The bake-off showed the least number of competitors that Nvidia has had in three years, just one: CPU giant Intel.

In past rounds, including the most recent in June, Nvidia had two or more competitors it was going up against, including Intel, Google with its "Tensor Processing Unit," or TPU chip, and chips from British startup Graphcore. And in rounds past, China's telecom giant Huawei.

Also: Intel unveils new chips that will power the upcoming Aurora supercomputer

For lack of competition, Nvidia this time around swept all the top scores, whereas in June, the company shared top status with Google. Nvidia submitted systems using its A100 GPU that has been out for several years, and also its brand-new H100, known as the "Hopper" GPU, in honor of computing pioneer Grace Hopper. The H100 took the top score in one of eight benchmark tests for so-called recommendation systems that are commonly used to suggest products to people on the Web.

Intel offered two systems using its Habana Gaudi2 chips, and also systems labeled "preview" that showed off its forthcoming Xeon server chip code-named Sapphire Rapids.

The Intel systems proved much slower than the Nvidia parts.

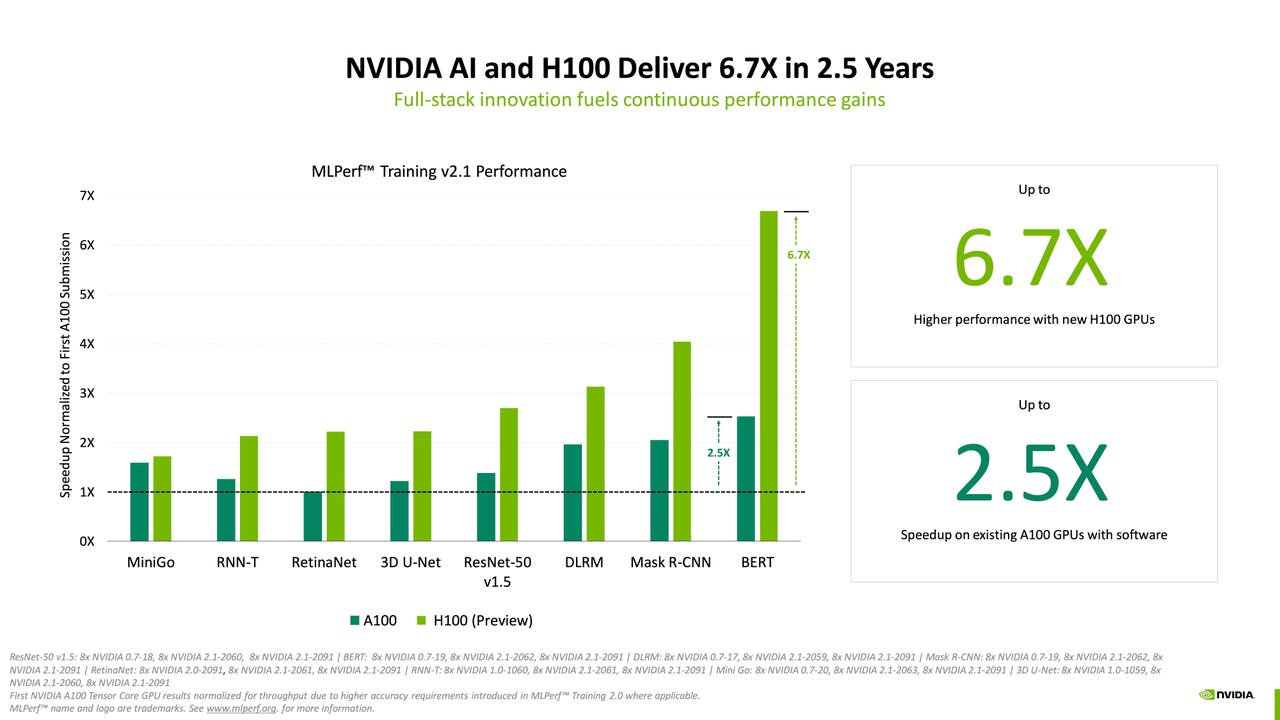

Nvidia said in a press release, "H100 GPUs (aka Hopper) set world records training models in all eight MLPerf enterprise workloads. They delivered up to 6.7x more performance than previous-generation GPUs when they were first submitted on MLPerf training. By the same comparison, today's A100 GPUs pack 2.5x more muscle, thanks to advances in software."

During a formal press conference, Nvidia's Dave Salvator, senior product manager for AI and cloud, focused on the performance improvements of Hopper and software tweaks to A100. Salvatore showed both how Hopper speeds up performance relative to A100 -- a test of Nvidia against Nvidia, in other words -- and also showed how Hopper was able to trample both the Intel Gaudi2 chips and Sapphire Rapids.

Also: Nvidia CEO Jensen Huang announces 'Hooper' GPU availability, cloud service for large AI language models

The absence of different vendors does not in itself signal a trend given that in past rounds of MLPerf, individual vendors have decided to skip the competition only to return in a subsequent round.

Google did not respond to a request for comment as to why it did not participate this time.

In an email, Graphcore told that the company had decided it may for the moment have better places to devote its engineers' time than the weeks or months it takes to prepare submissions for MLPerf.

"The issue of diminishing returns came up," Graphcore's head of communications, Iain Mackenzie, told via email, "In the sense that there will be an inevitable leap-frogging ad infinitum, further seconds shaved, ever-larger system configurations put forward."

Graphcore "may participate in future MLPerf rounds, but right now it doesn't reflect the areas of AI where we're seeing most exciting progress," Mackenzie told . MLPerf tasks are merely "table stakes."

Instead, he said, "We want really to focus our energies" on "unlocking new capabilities for AI practitioners." To that end, "You can expect to see some exciting progress soon" from Graphcore, said Mackenzie, "For example in model sparsification, as well as with GNNs," or Graph Neural Networks.

In addition to Nvidia's chips dominating the competition, all the computer systems that achieved top scores were those built by Nvidia rather than those from partners. That is also a change from past rounds of the benchmark test. Usually, some vendors, such as Dell, will achieve top marks for systems they put together using Nvidia chips. This time around, no systems vendor was able to beat Nvidia in Nvidia's own use of its chips.

The MLPerf training benchmark tests report how many minutes it takes to tune the neural "weights," or parameters, until the computer program achieves a required minimum accuracy on a given task, a process referred to as "training" a neural network, where a shorter amount of time is better.

Also: Benchmark test of AI's performance, MLPerf, continues to gain adherents

Although top scores often grab headlines -- and are emphasized to the press by vendors -- in reality, the MLPerf results include a wide variety of systems and a wide range of scores, not just a single top score.

In a conversation by phone, the MLCommons's executive director, David Kanter, told not to focus only on the top scores. Kanter said the value of the benchmark suite for companies that are evaluating purchasing AI hardware is to have a broad set of systems of various sizes with various types of performance.

The submissions, which number in the hundreds, range from machines with only a couple of ordinary microprocessors on up to machines that have thousands of host processors from AMD and thousands of Nvidia GPUs, the kind of systems that achieve the top scores.

"When it comes to ML training and inference, there's a wide variety of needs for all different levels of performance," Kanter told , "And part of the goal is to provide measures of performance that can be used at all of those different scales."

Kanter continued, "There is as much value in information about some of the smaller systems as in the larger-scale systems ... All of these systems are equally relevant and important but perhaps to different people."

As for the lack of participation by Graphcore and Google this time around, Kanter said, "I would love to see more submissions," also adding, "I understand for many companies, they may have to choose how they invest engineering resources. ... I think you'll see these things ebb and flow over time in different rounds" of the benchmark.

An interesting secondary effect of the paucity of competition to Nvidia meant that some top scores for some training tasks not only showed no improvement from the prior time but rather a regression.

For example, in the venerable ImageNet task, where a neural network is trained to assign a classifier label to millions of images, the top result this time around was the same result that had been third-place in June, an Nvidia-built system that took 19 seconds to train. That result in June had trailed results from Google's "TPU" chip, which came in at just 11.5 seconds and 14 seconds.

Asked about the repeat of an earlier submission, Nvidia told in email that its focus is on the H100 chip this time around, not the A100. Nvidia also noted there has been progress since the very first A100 results back in 2018. In that round of training benchmarks, an eight-way Nvidia system took almost 40 minutes to train ResNet-50. In this week's results, that time had been cut to under 30 minutes.

Nvidia also talked up its speed advantage versus Intel's Gaudi2 AI chips and forthcoming Sapphire Rapids XEON processor.

NvidiaAsked about the dearth of competitive submissions and the viability of MLPerf, Nvidia's Salvatore told reporters, "That's a fair question," adding, "We are doing everything we can to encourage participation; industry benchmarks thrive on participation. It is our hope that as some of the new solutions continue to come to market from others, that they will want to show off the benefits and the goodness of those solutions in an industry-standard benchmark as opposed to offering their own one-off performance claims, which are very hard to verify."

A key element of MLPerf, said Salvatore, is to rigorously publish the test setup and code to keep test results clear and consistent across the many hundreds of submissions from dozens of companies.

Alongside the MLPerf training benchmark scores, Wednesday's release by MLCommons also offered test results for HPC, meaning, scientific computing and supercomputers. Those submissions included a mix of systems from Nvidia and partners and also Fujitsu's Fugaku supercomputer that runs its own chips.

Also:Neural Magic's sparsity, Nvidia's Hopper, and Alibaba's network among firsts in latest MLPerf AI benchmarks

A third competition, called TinyML, measures how well low-power and embedded chips do when performing inference, the part of machine learning where a trained neural net makes predictions.

That competition, in which Nvidia so far has not participated, has an interesting diversity of chips and submissions from vendors, such as chip makers Silicon Labs and Qualcomm, European technology giant STMicroelectronics, and startups OctoML, Syntiant, and GreenWaves Technologies.

In one test of TinyML, an image recognition test using the CIFAR data set and the ResNet neural net, GreenWaves (which is headquartered in Grenoble, France), took the top score for having the lowest latency to process the data and come up with a prediction. The company submitted its Gap9 AI accelerator in combination with a RISC processor.

In prepared remarks, GreenWaves stated that Gap9 "delivers extraordinarily low energy consumption on medium-complexity neural networks, such as the MobileNet series in both classification and detection tasks, but also on complex, mixed precision recurrent neural networks, such as our LSTM based audio denoiser."

Горячие метки:

Искусственный интеллект

3. Инновации

Горячие метки:

Искусственный интеллект

3. Инновации