Can you see it? The end is nigh! The end of this blog series, that is, not necessarily "the end" as in AMC's The Walking Dead sort of end. Are you a zombie stumbling across this blog from a random Google search? Here is a table of contents to help you on your journey as we once again delve into the depths and address another question on our quest to answer... The VDI questions you didn't ask, but really should have.

You're invited! If you've been enjoying our blog series, please join us for a free webinar discussing the VDI Missing Questions with Tony, Doron, Shawn and Jason! Access the webinar here!

Got RAM? VDI is an interesting beast, both physically and in terms of its care and feeding. One thing this beast certainly does like is RAM (and braaaiiiins). Just in case I am still being stalked by that tech writer, RAM stands for Random Access Memory. I spoke a bit about operating systems in the 5th question in this series, and this builds on that with regard to how much memory you should use. Microsoft says Windows 7 needs:

1 gigabyte (GB) RAM (32-bit) or 2 GB RAM (64-bit). For the purpose of our testing, we went smack in the middle with 1.5GB of RAM. Does it really matter what we used for this testing? It does, a little. First, we need sufficient resources for the desktop to perform the functions of the workload test, and second, we need to pre-establish some boundaries to measure from.

Calculating overhead. To properly account for memory usage, we need to take into account the overhead of certain things in the hypervisor. If you want to learn more about calculating overhead, click here. Here are the items we are factoring into overhead:

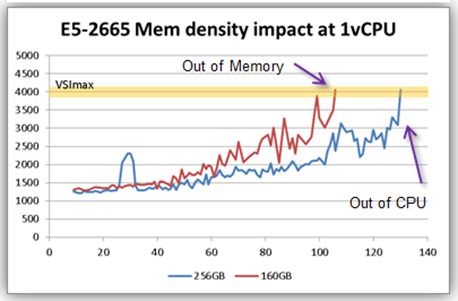

Numbers! In our testing we found that the E5-2665 B200 M3 could support 130 x 1vCPU desktops with 1.5GB each before it ran out of CPU, not memory. Factoring in the overhead, we can confirm this with a simple calculation: 200MB (ESXi) + 130 Desktops x (1.5GB + 29MB) = 203,650MB, or about 198.88GB of potential consumed memory. In our test with 256GB of RAM running at 1600MHz, we were nowhere near running out of memory space: CPU bottleneck confirmed.
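For readers who want to rerun the arithmetic with their own desktop sizes, here is a minimal sketch of the calculation above. It assumes a straight-line model with no memory reclaimed by overcommit, 1GB = 1024MB, and the 200MB ESXi and 29MB per-desktop overhead figures from this post; the constant and function names are hypothetical.

```python
# Figures from the test described above (1GB = 1024MB assumed).
HYPERVISOR_MB = 200        # ESXi overhead used in the post
DESKTOP_RAM_MB = 1536      # 1.5GB configured per desktop
DESKTOP_OVERHEAD_MB = 29   # per-desktop overhead used in the post


def consumed_memory_mb(desktops: int) -> int:
    """Potential consumed host memory, assuming no overcommit savings at all."""
    return HYPERVISOR_MB + desktops * (DESKTOP_RAM_MB + DESKTOP_OVERHEAD_MB)


if __name__ == "__main__":
    mb = consumed_memory_mb(130)
    print(f"{mb:,}MB or {mb / 1024:.2f}GB")  # 203,650MB or 198.88GB
```

Swapping in a different per-desktop size (for example 2048MB for a 2GB desktop) shows quickly how close a given host gets to its physical memory.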

So what happens if we physically limit the amount of memory and run the same test? Will magical free memory from all of the VMware overcommit goodness come into play and save the day? Will we be able to get back up to 130 desktops? I mean, we've already proven the blade configuration is capable of running 130 Virtual Desktops, so the big question is: is there enough magic in VMware memory technology to get us back there?

From our previous test and calculations we know that this system was CPU bottlenecked at 130 Virtual Desktops. We put 160GB of memory, running at the same speed, into the server, knowing this would enforce a RAM bottleneck at 104 Virtual Desktops. Following the same calculation, we should be able to run 104 Virtual Desktops before running out of RAM: 200MB (ESXi) + 104 Desktops x (1.5GB + 29MB) = 162,960MB, or 159.14GB. But again, the blade should be able to support 130 desktops from a CPU perspective. So how did that affect scalability?
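The same arithmetic can be turned around to predict how many desktops fit before a host runs out of physical RAM, and which resource gives out first. This is a minimal sketch under the same assumptions as the calculation in this post (straight-line model, no overcommit reclamation, 1GB = 1024MB); the function names and the `CPU_LIMIT` constant are hypothetical, with the value 130 taken from the 256GB test.

```python
# Figures from the tests described above (1GB = 1024MB assumed).
HYPERVISOR_MB = 200
DESKTOP_RAM_MB = 1536
DESKTOP_OVERHEAD_MB = 29
CPU_LIMIT = 130  # desktops the E5-2665 B200 M3 sustained before CPU ran out


def max_desktops_by_ram(host_gb: int) -> int:
    """Largest desktop count whose configured RAM plus overhead fits in physical memory."""
    usable_mb = host_gb * 1024 - HYPERVISOR_MB
    return usable_mb // (DESKTOP_RAM_MB + DESKTOP_OVERHEAD_MB)


def expected_capacity(host_gb: int) -> int:
    """Capacity is whichever resource runs out first: CPU or physical RAM."""
    return min(CPU_LIMIT, max_desktops_by_ram(host_gb))


if __name__ == "__main__":
    for gb in (256, 160):
        print(gb, max_desktops_by_ram(gb), expected_capacity(gb))
    # 256 167 130  -> CPU-bound
    # 160 104 104  -> RAM-bound
```

In the actual 160GB run the blade reached 106 desktops, slightly above this estimate, so treat the model as a planning floor rather than an exact prediction.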

Where's my magic?! We got 106 Virtual Desktops before the system ran out of steam. This is fairly close to our calculation of 104 with overhead. But I know what you're thinking: the system ran out of steam? You mean the system tanked? YES! Slightly past the point where we ran out of physical memory, the system as a whole was done for. To be fair, the staging of this and all of our tests started with a fresh boot of the desktops, a few minutes for utilization to level out, and then the LoginVSI test run. This doesn't give the hypervisor ample time to come in and reclaim additional memory and potentially fire up additional desktops. Could follow-up testing explore this more? It might just happen (hint). Even without time to reclaim, you can see the 256GB configuration had slightly lower latency across the board. And in a real-world boot storm, can we tell the other 24 VDI users to wait a couple of hours for VMware to reclaim memory before we start up their desktops? I doubt that would go over very well.

Answer:

Memory is often considered a "capacity" piece of the VDI puzzle. Overcommit can help stretch limited memory resources, but our tests show better performance, improved overall usability and lower response times when the hypervisor has sufficient physical memory to scale as workloads increase. The bottom line is that you can severely limit your server's capability by hoping that memory-saving technologies will allow for more Virtual Desktops. If you try to save money by skimping on RAM, you might actually end up with slow, stumbling ZDI (Zombie Desktop Infrastructure).

What's next? Jason wraps up this blog series with some storage observations as he addresses Virtual Desktop IOP requirements.

Tags:

Cisco UCS

Virtual Desktop Infrastructure (VDI)

VMware View
