One of the hottest debates in generative artificial intelligence (AI) is open source versus closed source: which will prove more valuable?

On one side, a plethora of open-source large language models (LLMs) are constantly being produced by an ever-evolving constellation of contributors, led by the most prestigious open-source model to date, Meta's Llama 2. Representing closed-source LLMs are the two most well-established commercial programs, OpenAI's GPT-4 and the venture-backed startup Anthropic's language model, which is known as Claude 2.

One way to test these programs against each other is to see how well they answer questions in a specific area, such as medical knowledge.

On that basis, Llama 2 is terrible at answering questions in the area of nephrology, the science of the kidneys, according to a recent study by scientists at Pepperdine University, University of California at Los Angeles, and UC Riverside. The study appeared this week in NEJM AI, a new journal published by the prestigious New England Journal of Medicine.

"In comparison with GPT-4 and Claude 2, the open-source models performed poorly in terms of total correct answers and the quality of their explanations," write lead author Sean Wu of Pepperdine's Keck Data Science Institute, and colleagues.

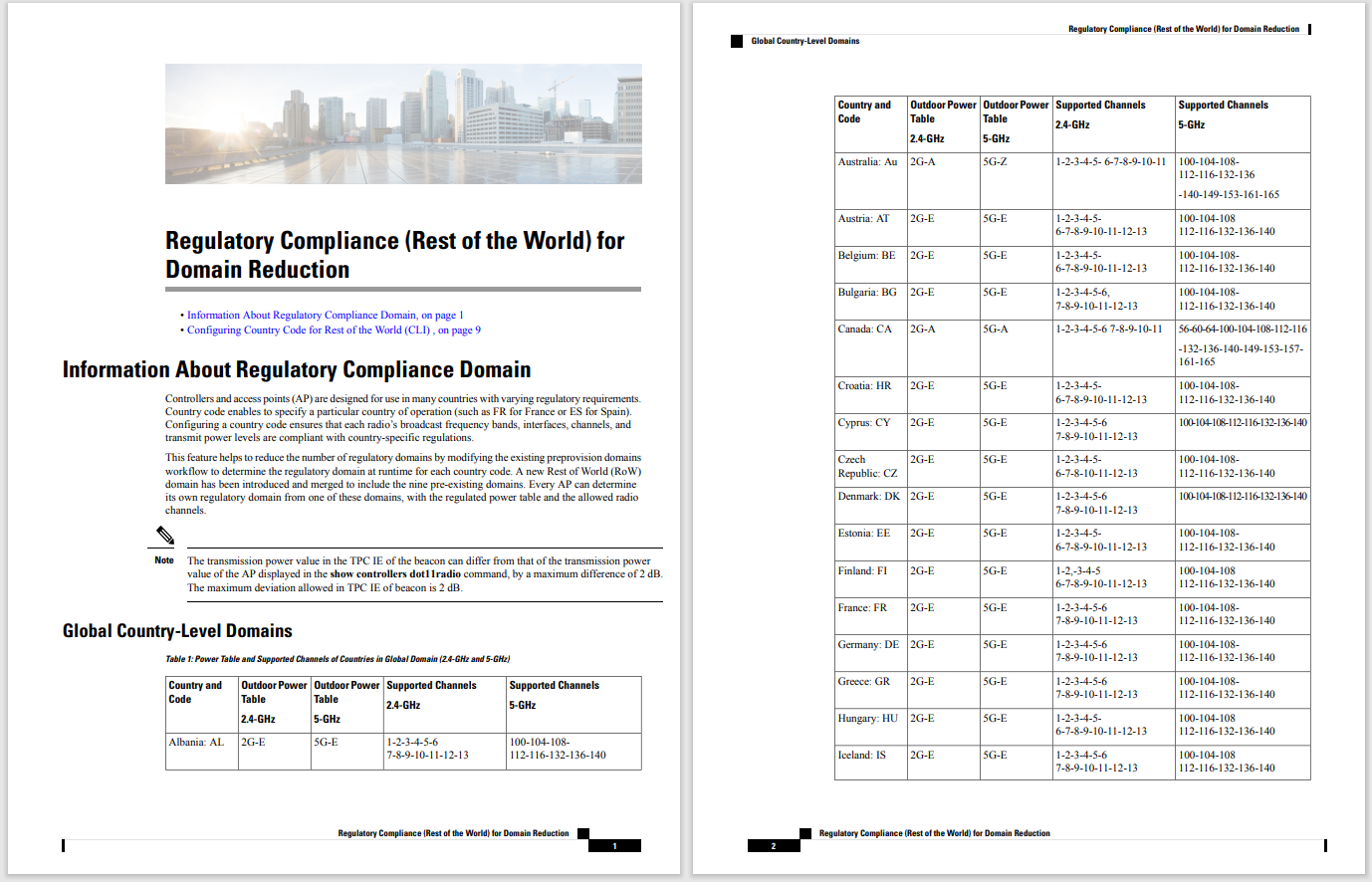

Scholars at Pepperdine University converted nephrology questions into prompts to feed into a bunch of large language models, including Llama 2 and GPT-4.

"GPT-4 performed exceptionally well and achieved human-like performance for the majority of topics," they write, achieving a score of 73.3%, just below the 75% rating that is a passing grade for a human who has to answer multiple-choice nephrology questions.

"The majority of the open-source LLMs achieved an overall score that did not differ from what would be expected if the questions were answered randomly," they write, with Llama 2 doing the best out of five open-source models, including Vicuña and Falcon. The Llama 2 program was just above the level of random guessing (23.8%) with a score of 30.6%.
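
The article does not say how the random-guessing baseline was derived. One plausible reading, offered here only as an assumption, is that questions carry different numbers of answer choices, so the expected accuracy of a blind guesser is the average of 1/k over all questions, where k is the number of options on each one. A minimal sketch of that arithmetic, using made-up option counts rather than the study's data:

```python
from fractions import Fraction


def random_guess_accuracy(option_counts):
    """Expected accuracy when one option is picked uniformly at random per question.

    option_counts: number of answer choices for each question (hypothetical input).
    """
    if not option_counts:
        raise ValueError("need at least one question")
    # Each question contributes a 1/k chance of a lucky hit; average over questions.
    expected_hits = sum(Fraction(1, k) for k in option_counts)
    return float(expected_hits / len(option_counts))


# Hypothetical mix: two 4-option questions and two 5-option questions.
baseline = random_guess_accuracy([4, 4, 5, 5])
print(f"Random-guess baseline: {baseline:.3f}")
```

A model's score only means something relative to this floor, which is why a result such as 30.6% against a 23.8% baseline reads as barely better than chance.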

The study was a test of what is known in AI as "zero-shot" tasks, where a language model is used with no modifications and no examples of right and wrong answers. A zero-shot test measures how well a model handles a task from the prompt alone, relying only on what it absorbed during training.

In the test, the models -- Llama 2 and four other open-source programs, plus two commercial programs -- were each fed 858 nephrology questions from NephSAP, the Nephrology Self-Assessment Program, a publication of the American Society of Nephrology used by physicians for self-study in the field.

The authors had to perform significant data preparation to convert the plain-text files of NephSAP into prompts that could be fed into the language models. Every prompt contained the question in natural language and the multiple-choice answers. (The data set is posted for others to use on HuggingFace.)
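
To make the idea of a zero-shot prompt concrete, here is a minimal sketch of how a question and its multiple-choice answers might be combined into one block of text. The function name `build_prompt`, the instruction wording, and the sample question are all illustrative assumptions; the article does not show the authors' actual template, and the sample is not a NephSAP item.

```python
from string import ascii_uppercase


def build_prompt(question, choices):
    """Format a question and its answer choices as a single zero-shot prompt.

    The layout and closing instruction are assumptions, not the study's template.
    """
    if not 2 <= len(choices) <= len(ascii_uppercase):
        raise ValueError("expected between 2 and 26 answer choices")
    lines = [f"Question: {question.strip()}", ""]
    # Label the choices A, B, C, ... in the order given.
    for letter, choice in zip(ascii_uppercase, choices):
        lines.append(f"{letter}. {choice.strip()}")
    # No worked examples are included, which is what makes the prompt zero-shot.
    lines += ["", "Answer with the letter of the single best option."]
    return "\n".join(lines)


# Placeholder question for illustration only.
prompt = build_prompt(
    "Which organ filters blood to produce urine?",
    ["Liver", "Kidney", "Spleen", "Lung"],
)
print(prompt)
```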

And because GPT-4 and Llama 2 and the others produce lengthy text output as their answers in many cases, the authors also had to develop automatic techniques to parse the answers from each model for every question, and then compare the model's answers to the correct answers to automatically score the results.
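
The article does not describe the parsing rules the authors used, so the following is only a sketch of one way such automatic scoring could work: look for a stated answer letter in the free-text output, then compare it with the answer key. The patterns, the A-to-E letter range, and the names `extract_choice` and `score` are assumptions made for illustration.

```python
import re

# Assumed patterns: an explicit "answer is (B)" / "Answer: C" statement first,
# then a line that simply opens with a letter such as "D." or "(D)".
ANSWER_PATTERNS = [
    re.compile(r"(?i:answer)\s*(?:is|:)?\s*\(?([A-E])\)?(?![A-Za-z])"),
    re.compile(r"^\s*\(?([A-E])\)?[.):]", re.MULTILINE),
]


def extract_choice(output):
    """Return the answer letter found in a model's output, or None if none is found."""
    for pattern in ANSWER_PATTERNS:
        match = pattern.search(output)
        if match:
            return match.group(1)
    return None


def score(outputs, answer_key):
    """Fraction of outputs whose extracted letter matches the answer key."""
    if not answer_key or len(outputs) != len(answer_key):
        raise ValueError("outputs and answer_key must be non-empty and the same length")
    # An unparseable output yields None, which never equals a key letter.
    correct = sum(extract_choice(o) == k for o, k in zip(outputs, answer_key))
    return correct / len(answer_key)


# Made-up model outputs, not taken from the study.
outputs = [
    "The correct answer is (B) because the other options do not fit.",
    "Answer: C",
    "D. This option is the most likely explanation.",
    "I am not sure.",
]
print(score(outputs, ["B", "C", "A", "D"]))
```

Counting unparseable outputs as wrong is a design choice in this sketch; a real pipeline might instead flag them for manual review.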

There are lots of potential reasons why open-source models do poorly versus GPT-4, but the authors suspect an important one is that Anthropic and OpenAI have baked proprietary medical data into the training of their programs.

"GPT-4 and Claude 2 were trained not only on publicly available data but also on third-party data," they write.

"High-quality data for training LLMs in the medical field often reside in nonpublic materials that have been curated and peer reviewed, such as textbooks, published articles, and curated datasets," note Wu and team. "Without negating the importance of the computational power of specific LLMs, the ability to access medical training data material that is currently not in the public domain will likely remain a key factor that determines whether performance of specific LLMs will improve in the future."

Clearly, with GPT-4 scoring nearly two points below a human passing grade, there is vast room for improvement for all language models, not just open-source ones.

Happily for the open-source crowd, efforts are underway that could help even the odds in terms of training data.

One of these efforts is the broad movement toward what's called federated training, where language models are trained locally on private data, but then contribute the results of that training to an aggregate effort in the public cloud.

That approach can be a way to bridge the divide between confidential data sources in medicine and the collective push to make open-source foundation models stronger. One prominent endeavor in that area is the MedPerf effort of the ML Commons industry consortium, which began last year.
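
To illustrate the general idea, here is a toy sketch of federated averaging, one well-known way of combining locally trained models. It is not a description of how MedPerf or any particular project works. Each "site" fits a tiny linear model on its own data and sends back only the resulting weights, which a central server averages in proportion to each site's sample count. The data, learning rate, and function names are all assumptions for illustration.

```python
def local_update(weights, data, lr=0.1, epochs=5):
    """One site's training: gradient descent for y = w*x + b on its private (x, y) pairs."""
    w, b = weights
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / len(data)
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / len(data)
        w, b = w - lr * grad_w, b - lr * grad_b
    return (w, b)


def federated_average(updates):
    """Average the sites' weights, weighting each site by its number of samples."""
    total = sum(n for _, n in updates)
    dims = len(updates[0][0])
    return tuple(sum(wts[i] * n for wts, n in updates) / total for i in range(dims))


def train(sites, rounds=200):
    """Run several rounds; raw data never leaves a site, only trained weights do."""
    global_weights = (0.0, 0.0)
    for _ in range(rounds):
        updates = [(local_update(global_weights, data), len(data)) for data in sites]
        global_weights = federated_average(updates)
    return global_weights


# Two hypothetical sites whose private data both follow y = 2x + 1.
site_a = [(0.0, 1.0), (0.5, 2.0)]
site_b = [(1.0, 3.0), (1.5, 4.0), (2.0, 5.0)]
w, b = train([site_a, site_b])
print(f"w = {w:.3f}, b = {b:.3f}")
```

The point of the design is that the server only ever sees weights, so confidential records can stay where they are while still improving a shared model.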

It's also possible that some commercial models will be distilled into open-source programs that will inherit specific medical competencies from the parent. For example, Google DeepMind's MedPaLM is an LLM that is tuned to answer questions from a variety of medical datasets, including a brand-new one invented by Google that represents questions consumers ask about health on the internet.

Even without training a program on medical knowledge, the output can be improved with "retrieval-augmented generation", which is an approach where LLMs seek outside input as they're forming their outputs to amplify what the neural network can do on its own.
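
As a rough illustration of retrieval-augmented generation, the sketch below picks the passages that share the most words with a question and pastes them into the prompt ahead of the question. Production systems typically use vector search over large document stores; the word-overlap ranking, the sample passages, and the function names here are simplifying assumptions, and the call to an actual language model is left out.

```python
import re


def tokenize(text):
    """Lowercase a string and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, documents, k=2):
    """Return the k documents sharing the most words with the query."""
    query_words = tokenize(query)
    ranked = sorted(documents, key=lambda d: len(query_words & tokenize(d)), reverse=True)
    return ranked[:k]


def build_augmented_prompt(query, documents, k=2):
    """Prepend the retrieved passages to the question as context for the model."""
    context = "\n".join(f"- {passage}" for passage in retrieve(query, documents, k))
    return f"Use the context to answer.\n\nContext:\n{context}\n\nQuestion: {query}"


# Hypothetical reference passages standing in for an outside knowledge source.
notes = [
    "The kidneys filter blood and produce urine.",
    "The heart pumps blood through the body.",
    "Nephrology is the branch of medicine concerned with the kidneys.",
]
print(build_augmented_prompt("What do the kidneys filter?", notes))
```

The model's weights are untouched in this approach; only the prompt changes, which is why it can help even a program that was never trained on the material.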

Regardless of which approach wins, the open nature of Llama 2 and the other models affords the opportunity for many parties to make the programs better, unlike commercial programs such as GPT-4 and Claude 2, whose operations are at the sole discretion of their corporate owners.
