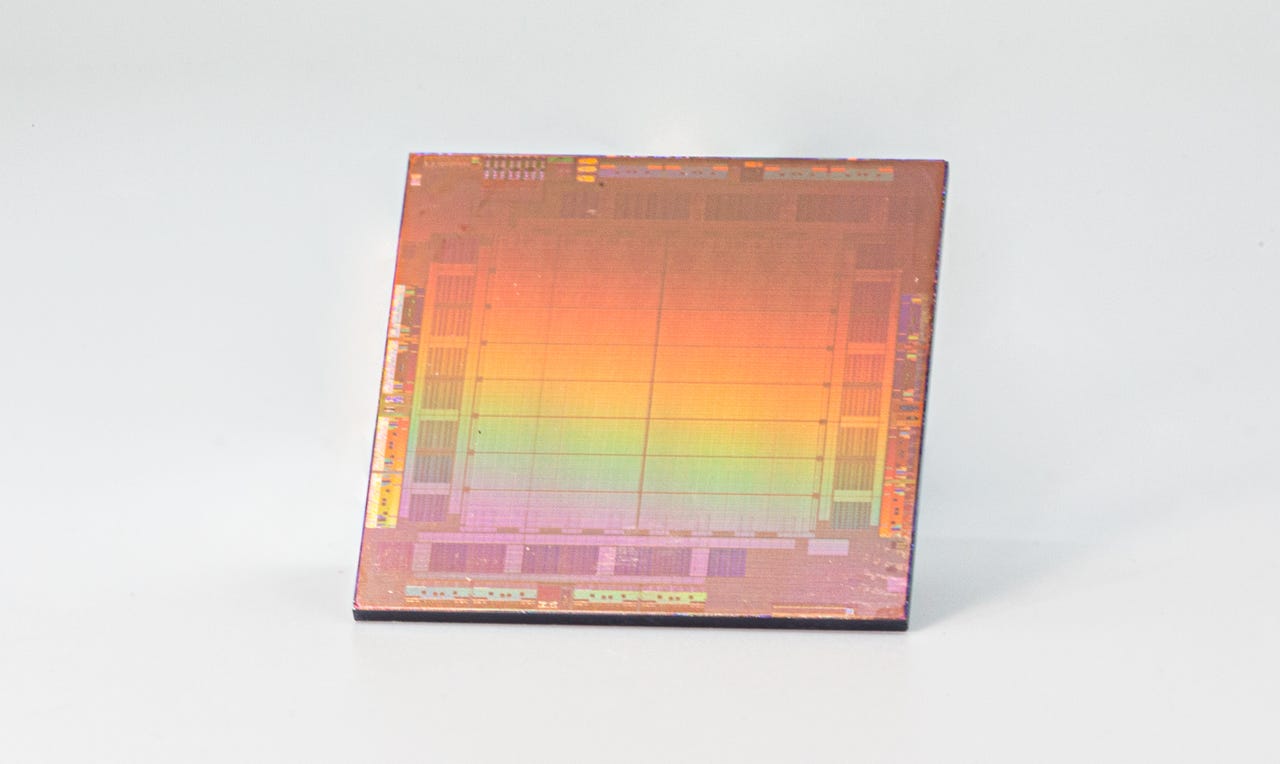

Meta on Thursday unveiled its first chip, the MTIA, which it said is optimized to run recommendation engines and benefits from close collaboration with the company's PyTorch developers.

Meta, owner of Facebook, WhatsApp and Instagram, on Thursday unveiled its first custom-designed computer chip tailored especially for processing artificial intelligence programs, called the Meta Training and Inference Accelerator, or "MTIA."

The chip, consisting of a mesh of blocks of circuits that operate in parallel, runs software that optimizes programs using Meta's PyTorch open-source developer framework.

Meta describes the chip as being tuned for one particular type of AI program: deep learning recommendation models. These are programs that can look at a pattern of activity, such as clicking on posts on a social network, and predict related, possibly relevant material to recommend to the user.
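
To make the idea concrete, here is a minimal sketch of how such a model can score candidates. It is not Meta's model: the item names, the three-number embeddings, and the averaging step are all hypothetical, chosen only to show the pattern of turning past clicks into a user vector and ranking unseen items against it.

```python
import math

# Hypothetical 3-dimensional item embeddings (assumption for illustration);
# a real recommendation model learns these values during training.
ITEM_EMBEDDINGS = {
    "post_cats": [0.9, 0.1, 0.0],
    "post_dogs": [0.8, 0.2, 0.1],
    "post_tax_tips": [0.0, 0.1, 0.9],
    "post_kittens": [0.95, 0.05, 0.0],
}


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def user_vector(clicked):
    """Average the embeddings of the items the user clicked on."""
    dims = len(next(iter(ITEM_EMBEDDINGS.values())))
    total = [0.0] * dims
    for item in clicked:
        for d, value in enumerate(ITEM_EMBEDDINGS[item]):
            total[d] += value
    return [t / len(clicked) for t in total]


def click_probability(user, item):
    """Squash the user-item dot product into (0, 1) with a sigmoid."""
    return 1.0 / (1.0 + math.exp(-dot(user, ITEM_EMBEDDINGS[item])))


def recommend(clicked, top_k=2):
    """Rank items the user has not clicked by predicted click probability."""
    user = user_vector(clicked)
    candidates = [i for i in ITEM_EMBEDDINGS if i not in clicked]
    ranked = sorted(candidates, key=lambda i: click_probability(user, i), reverse=True)
    return ranked[:top_k]


print(recommend(["post_cats"]))
```

A user who clicked only the hypothetical "post_cats" item is steered toward the items whose embeddings point in a similar direction, and away from the unrelated one.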

The chip is the first version in what Meta calls a family of chips; the company said work on it began in 2020. No detail was offered as to when future models of the chip will arrive.

Meta follows other giant tech companies that have developed their own chips for AI in addition to using the standard GPU chips from Nvidia that have come to dominate the field. Microsoft, Google and Amazon have all unveiled multiple custom chips over the past several years to handle different aspects of AI programs.

The Meta announcement was part of a broad presentation Thursday in which several Meta executives discussed how they are beefing up Meta's computing capabilities for artificial intelligence.

In addition to the MTIA chip, the company discussed a "next-gen data center" it is building that "will be an AI-optimized design, supporting liquid-cooled AI hardware and a high-performance AI network connecting thousands of AI chips for data center-scale AI training clusters."

Meta also disclosed a custom chip for encoding video, called the Meta Scalable Video Processor. The chip is designed to more efficiently compress and decompress video and encode it into multiple different formats for uploading and viewing by Facebook users. Meta said the MSVP chip "can offer a peak transcoding performance of 4K at 15fps at the highest quality configuration with 1-in, 5-out streams and can scale up to 4K at 60fps at the standard quality configuration."

Rather than rely on Nvidia GPUs, or CPUs from Intel, Meta said, "with an eye on future AI-related use cases, we believe that dedicated hardware is the best solution in terms of compute power and efficiency" for video. The company noted that people spend half their time on Facebook watching video, with over four billion video views per day.

Meta has for years hinted at its development of a chip, as when its chief AI scientist, Yann LeCun, was interviewed on the matter in 2019. The company kept silent about the details of those efforts even as its peers rolled out chip after chip, and as startups such as Cerebras Systems, Graphcore and SambaNova Systems arose to challenge Nvidia with exotic chips focused on AI.

The MTIA has aspects similar to chips from the startups. At the heart of the chip, a mesh of sixty-four so-called processor elements, arranged in a grid of eight by eight, echoes many designs for AI chips that adopt what is called a "systolic array," where data can move through the elements at peak speed.
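
For readers unfamiliar with the term, the following is a generic textbook-style simulation of a systolic array multiplying two square matrices. It is an illustration of the general idea only, not a description of how MTIA's processor elements work. It assumes an "output-stationary" layout, in which each grid element keeps one running sum while operands stream past it, one step per clock cycle; the function name and the cycle model are assumptions made for this sketch.

```python
def systolic_matmul(a, b):
    """Multiply two n-by-n matrices the way an output-stationary systolic array would.

    Element (i, j) of the grid owns the running sum for c[i][j]. Rows of `a`
    enter from the left and columns of `b` from the top, each delayed by one
    cycle per row or column, so a[i][k] and b[k][j] meet at element (i, j)
    on cycle i + j + k.
    """
    n = len(a)
    c = [[0] * n for _ in range(n)]
    total_cycles = 3 * n - 2  # the last operands meet on cycle 3 * (n - 1)
    for cycle in range(total_cycles):
        # Every element works in parallel within a cycle; the loops below
        # simply visit each element once per simulated cycle.
        for i in range(n):
            for j in range(n):
                k = cycle - i - j
                if 0 <= k < n:
                    c[i][j] += a[i][k] * b[k][j]
    return c, total_cycles


product, cycles = systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
print(product, cycles)
```

Under this simplified model, an eight-by-eight grid finishes an eight-by-eight matrix product in 3 × 8 − 2 = 22 cycles, because all sixty-four elements accumulate at once rather than one after another.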

The MTIA chip is somewhat unusual in being constructed to handle both of the two main phases of artificial intelligence programs, training and inference. Training is the stage when the neural network of an AI program is first refined until it performs as expected. Inference is the actual use of the neural network to make predictions in response to user requests. Usually, the two stages have very different requirements in terms of computer processing and are handled by distinct chip designs.
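
The difference between the two phases can be seen in a deliberately tiny sketch. The one-weight "network" below, its learning rate, and its sample data are all hypothetical; the point is only that training loops over the data many times and adjusts the weight, while inference is a single cheap calculation with the weight held fixed.

```python
def train(samples, learning_rate=0.05, epochs=200):
    """Training: repeatedly nudge the weight to reduce the prediction error."""
    weight = 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = weight * x - target
            # Gradient step for squared error on a one-weight linear model.
            weight -= learning_rate * error * x
    return weight


def infer(weight, x):
    """Inference: use the already-trained weight to make a prediction."""
    return weight * x


# Hypothetical data following the rule "output is twice the input".
samples = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
weight = train(samples)
print(round(infer(weight, 10.0), 3))
```

Training here performs 600 weight updates, while each inference is one multiplication, which hints at why the two phases usually place very different demands on hardware.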

The MTIA chip, said Meta, can be up to three times more efficient than GPUs in terms of the number of floating-point operations per second for every watt of energy expended. However, when the chip is tasked with more complex neural networks, it lags GPUs, Meta said, indicating more work is needed on future versions of the chip to handle complex tasks.

Thursday's presentation by Meta's engineers emphasized how MTIA benefits from hardware-software "co-design," where the hardware engineers exchange ideas in a constant dialogue with the company's PyTorch developers.

In addition to writing code to run on the chip in PyTorch or C++, developers can write in a dedicated language developed for the chip called KNYFE. The KNYFE language "takes a short, high-level description of an ML operator as input and generates optimized, low-level C++ kernel code that is the implementation of this operator for MTIA," Meta said.

Meta discussed how it integrated multiple MTIA chips into server computers based on the Open Compute Project that Meta helped pioneer.

More details on the MTIA are provided in a blog post by Meta.

Meta's engineers will present a paper on the chip at the International Symposium on Computer Architecture conference in Orlando, Florida, in June, titled, "MTIA: First Generation Silicon Targeting Meta's Recommendation System."
