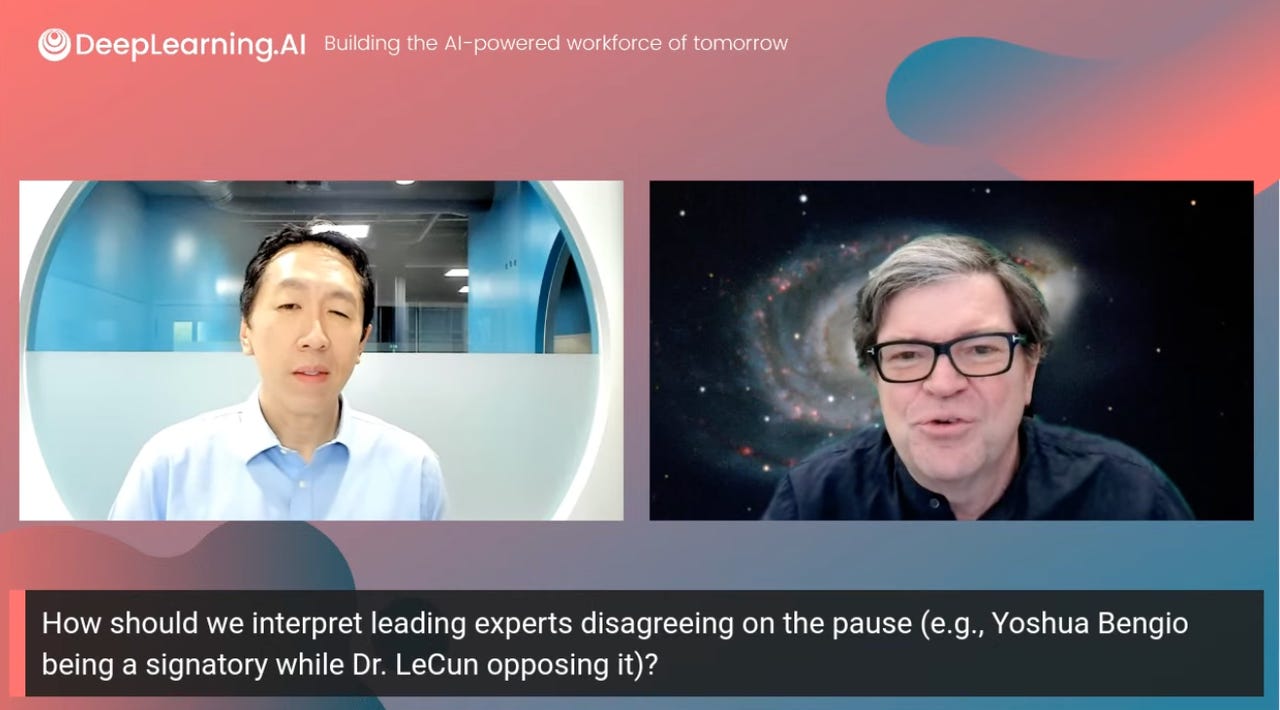

"A number of very smart people signed on to this proposal, and I think both Yann and I are concerned with this proposal," said Andrew Ng, left, founder and CEO of applied AI firm Landing.ai and AI educational outfit DeepLearning.ai. He was joined by Yann LeCun, chief AI scientist for Meta Platforms.

Image: DeepLearning.ai

During a live webcast on YouTube on Friday, Meta Platforms' chief AI scientist, Yann LeCun, drew a line between regulating the science of AI and regulating products, pointing out that the most controversial of AI developments, OpenAI's ChatGPT, represents a product, not basic R&D.

"When we're talking about GPT-4, or whatever OpenAI puts out at the moment, we're not talking about research and development, we're talking about product development, okay?" said LeCun.

"OpenAI pivoted from an AI research lab that was relatively open, as the name indicates, to a for-profit company, and now a kind of contract research lab mostly for Microsoft that doesn't reveal anything anymore about how they work, so this is product development; this is not R&D."

LeCun was holding a joint webcast with Andrew Ng, founder and CEO of the applied AI firm Landing.ai and the AI educational outfit DeepLearning.ai. The two presented their argument against the six-month moratorium on AI training that has been proposed by the Future of Life Institute and signed by various luminaries.

The proposal calls for AI labs to pause -- for at least six months -- the training of AI systems more powerful than OpenAI's GPT-4, the latest of several so-called large language models that are the foundation for AI programs such as ChatGPT.

A replay of the half-hour session can be viewed on YouTube.

Both LeCun and Ng made the case that delaying AI research would be harmful because it would cut off progress.

"I feel like, while AI today has some risk of harm -- I think bias, fairness, concentration of power, those are real issues -- it is also creating real value, for education, for healthcare, is incredibly exciting, the value so many people are creating to help other people," said Ng.

"As amazing as GPT-4 is today, building something even better than GPT-4 will help all of these applications to help a lot of people," continued Ng. "So, pausing that progress seems like it would create a lot of harm and slow down the creation of a lot of very valuable stuff that would help a lot of people."

LeCun concurred, while emphasizing a distinction between research and product development.

"My first reaction to this, is, that calling for a delay in research and development amounts to a new wave of obscurantism, essentially," said LeCun. "Why slow down the progress of knowledge and science?"

"Then there is the question of products," continued LeCun. "I'm all for regulating products that get in the hands of people; I don't see the point of regulating R&D, I don't think this serves any purpose other than reducing the knowledge that we could use to actually make technology better, safer."

LeCun referred to OpenAI's decision, with GPT-4, to dramatically limit disclosure of how its program works, offering almost nothing of technical value in the formal research paper introducing the program to the world last month.

"Partly, people are unhappy about OpenAI being secretive now, because most of the ideas that have been used by them in their products were not from them," said LeCun. "They were ideas published by people from Google, and FAIR [Facebook AI Research], and various other academic groups, etc., and now they are kind of under lock and key."

LeCun was asked in the Q&A why his peer, Yoshua Bengio, head of Canada's MILA institute for AI, has signed the moratorium letter. LeCun replied that Bengio is particularly concerned about openness in AI research. Indeed, Bengio has said OpenAI's turn to secrecy could have a chilling effect on fundamental research.

"I agree with him" about the need for openness in research, said LeCun. "But we are not talking about research here, we are talking about products."

LeCun's characterization of OpenAI's work as merely product development is consistent with prior remarks that OpenAI has made no actual scientific breakthroughs. In January, he remarked that OpenAI's ChatGPT is "not particularly innovative."

During Friday's session, LeCun predicted that the free flow of research will produce programs that meet or exceed GPT-4 in capabilities.

"This is not going to last very long, in the sense that there are going to be a lot of other products that have similar capabilities, if not better, within relatively short order," said LeCun.

"OpenAI has a bit of an advance because of the flywheel of data that allows them to tune it, but this is not going to last."
