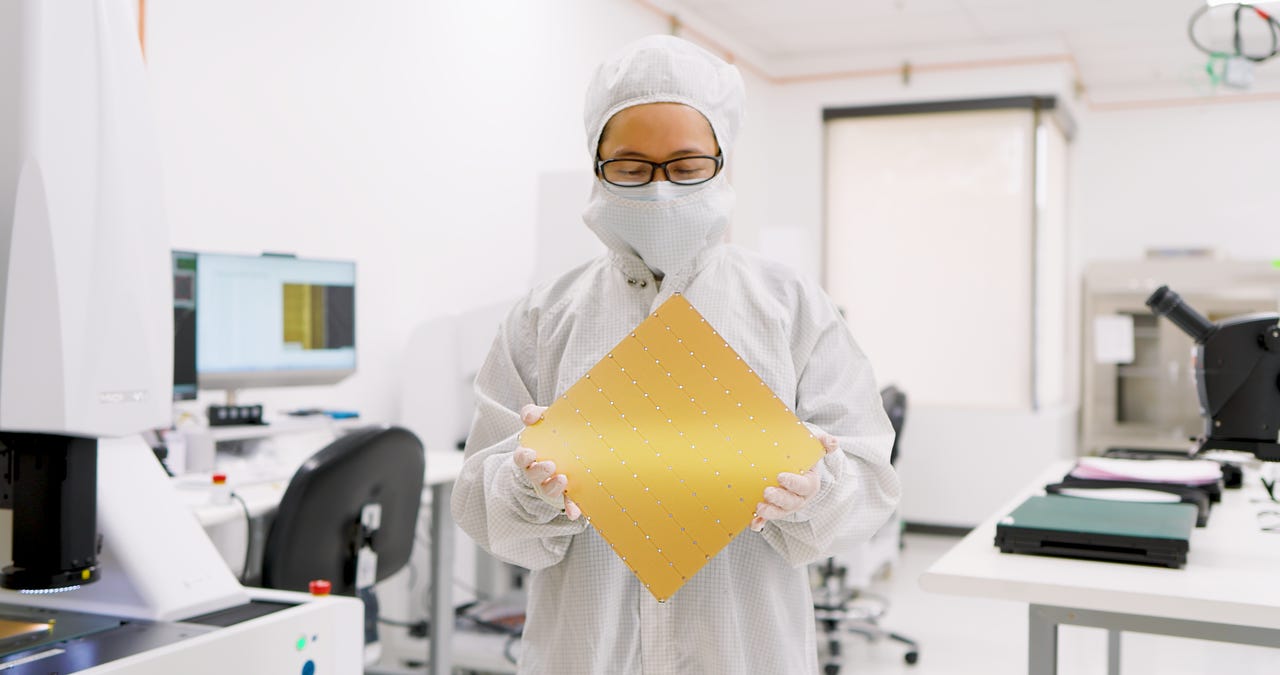

Cerebras operations technician Truc Nguyen holds Cerebras' WSE-3 chip. The size of almost an entire 12-inch semiconductor wafer, the chip is the world's largest, dwarfing Nvidia's H100 GPU. The AI startup notes that a single one of its CS-3 computers running the chip can crunch a neural network like GPT-4 with a hypothetical parameter count of 24 trillion.

The race for ever-larger generative artificial intelligence models continues to fuel the chip industry. On Wednesday, Cerebras Systems, one of Nvidia's most prominent competitors, unveiled the "Wafer Scale Engine 3," the third generation of its AI chip and the world's largest semiconductor.

Cerebras released the WSE-2 in April 2021. Its successor, the WSE-3, is designed for training AI models, meaning refining their neural weights, or parameters, to optimize their functionality before they are put into production.

"It's twice the performance, same power draw, same price, so this would be a true Moore's Law step, and we haven't seen that in a long time in our industry," Cerebras co-founder and CEO Andrew Feldman said in a press briefing for the chip, referring to the decades-old rule that chip circuitry doubles roughly every 18 months.

The WSE-3 doubles the rate of instructions carried out, from 62.5 petaFLOPs to 125 petaFLOPS. One petaFLOP refers to 1,000,000,000,000,000 (1 quadrillion) floating-point operations per second.

The size of almost an entire 12-inch wafer, like its predecessor, the WSE-3 has shrunk its transistors from 7 nanometers -- seven billionths of a meter -- to 5 nanometers, boosting the transistor count from 2.6 trillion transistors in WSE-2 to 4 trillion. TSMC, the world's largest contract chipmaker, is manufacturing the WSE-3.

Also:How AI firewalls will secure your new business applications

Cerebras has kept the same ratio of logic transistors to memory circuits by only slightly increasing the memory content of the on-chip SRAM, from 40GB to 44GB, and slightly increasing the number of compute cores from 850,000 to 900,000.

"We think we've got the right balance now between compute and memory," Feldman said in the briefing, which took place at the headquarters of Colovore, the startup's cloud hosting partner, in Santa Clara, California.

As with the prior two chip generations, Feldman compared the WSE-3's enormous size to the current standard from Nvidia, in this case, the H100 GPU, which he called "this poor, sad part here" in a slide deck image.

"It's 57 times larger," Feldman said, comparing the WSE-3 against Nvidia's H100. "It's got 52 times more cores. It's got 800 times more memory on chip. It's got 7,000 times more memory bandwidth and more than 3,700 times more fabric bandwidth. These are the underpinnings of performance."

"This would be a true Moore's Law step," said Feldman of the doubling of operations per second of the new chip, "and we haven't seen that in a long time in our industry."

Cerebras used the extra transistors to make each compute core larger, enhancing certain features, such as doubling the "SIMD" capability, the multiprocessing feature that affects how many data points can be processed in parallel for each clock cycle.

The chip comes packaged in a new version of the chassis and power supply, the CS-3, which can now cluster to 2,048 machines, 10 times as many as before. Those combined machines can carry out 256 exaFLOPS, a thousand petaFLOPS, or a quarter of a zetaFLOP.

Also:AI pioneer Cerebras is having 'a monster year' in hybrid AI computing

Feldman said its CS-3 computer with WSE-3 can handle a theoretical large language model of 24 trillion parameters, which would be an order of magnitude more than top-of-the-line generative AI tools such as OpenAI's GPT-4, which is rumored to have 1 trillion parameters. "The entire 24 trillion parameters can be run on a single machine," Feldman said.

To be clear, Cerebras is making this comparison using a synthetic large language model that is not actually trained. It's merely a demonstration of WSE-3's compute capability.

The Cerebras machine is easier to program than a GPU, Feldman argued. In order to train the 175-billion parameter GPT-3, a GPU would require 20,507 lines of combined Python, C/C++, CUDA, and other code, versus just 565 lines of code for the WSE-3.

Also: Cerebras and Abu Dhabi's M42 made an LLM dedicated to answering medical questions

For raw performance, Feldman compared training times by cluster size. Feldman said a cluster of 2,048 CS-3s could train Meta's 70-billion-parameter Llama 2 large language model 30 times faster than Meta's AI training cluster: one day versus 30 days.

"When you work with clusters this big, you can bring to every enterprise the same compute that the hyperscalers use for themselves," Feldman said, "and not only can you bring what they do, but you can bring it radically faster."

Feldman highlighted customers for the machines, including G42, a five-year-old investment firm based in Abu Dhabi, the United Arab Emirates.

Cerebras is working on a cluster of 64 CS-3 machines for G42 at a facility in Dallas, Texas, called "Condor Galaxy 3," the next part of a nine-part project that is expected to reach tens of exaFLOPS by the end of 2024.

Like Nvidia, Cerebras has more demand than it can fill at the moment, Feldman said. The startup has a "sizeable backlog of orders for CS-3 across enterprise, government, and international clouds."

Also: Making GenAI more efficient with a new kind of chip

Feldman also unveiled a partnership with chip giant Qualcomm to use the latter's AI 100 processor for the second part of generative AI, the inference process that consists of making predictions on live traffic. Noting that the cost to run generative AI models in production scales with the parameter count, Feldman pointed out that running ChatGPT could cost$1 trillion annually if every person on the planet submitted requests to it.

The partnership applies four techniques to reduce the cost of inference. Using what's called sparsity, which ignores zero-valued input, Cerebras' software eliminates as much as 80% of unnecessary computations, Feldman said. A second technique, speculative decoding, makes the predictions using a smaller version of a large language model, and then has a bigger version check the answers. Feldman explained that's because it costs less energy to check the output of a model than to produce the output in the first place.

A third technique converts the output of the model into MX6, a compiled version that needs only half the memory it normally would on the Qualcomm AI 100 accelerator. Lastly, the WSE-3's software uses network architecture search to select a subset of parameters to be compiled and run on the AI 100, which, again, can reduce compute and memory usage.

The four approaches increase the number of "tokens" processed on the Qualcomm chip per dollar spent by an order of magnitude, Feldman said, where a token can be a part of a word in a phrase or a piece of computer code for a developer's "co-pilot." In inference, "performance equals cost," Feldman noted.

"We radically reduce how much time you have to spend in thinking about how you go from your training parameters to your production inference by collaborating with Qualcomm and ensuring a seamless workflow," Feldman said.

The inference market is widely expected to become a greater focus of the arms race in AI as inference moves from data centers out to more "edge" devices, including enterprise servers and even energy-constrained devices such as mobile devices.

"I believe that more and more, the easy inference will go to the edge and Qualcomm has real advantage there," Feldman said.

Горячие метки:

3. Инновации

Горячие метки:

3. Инновации