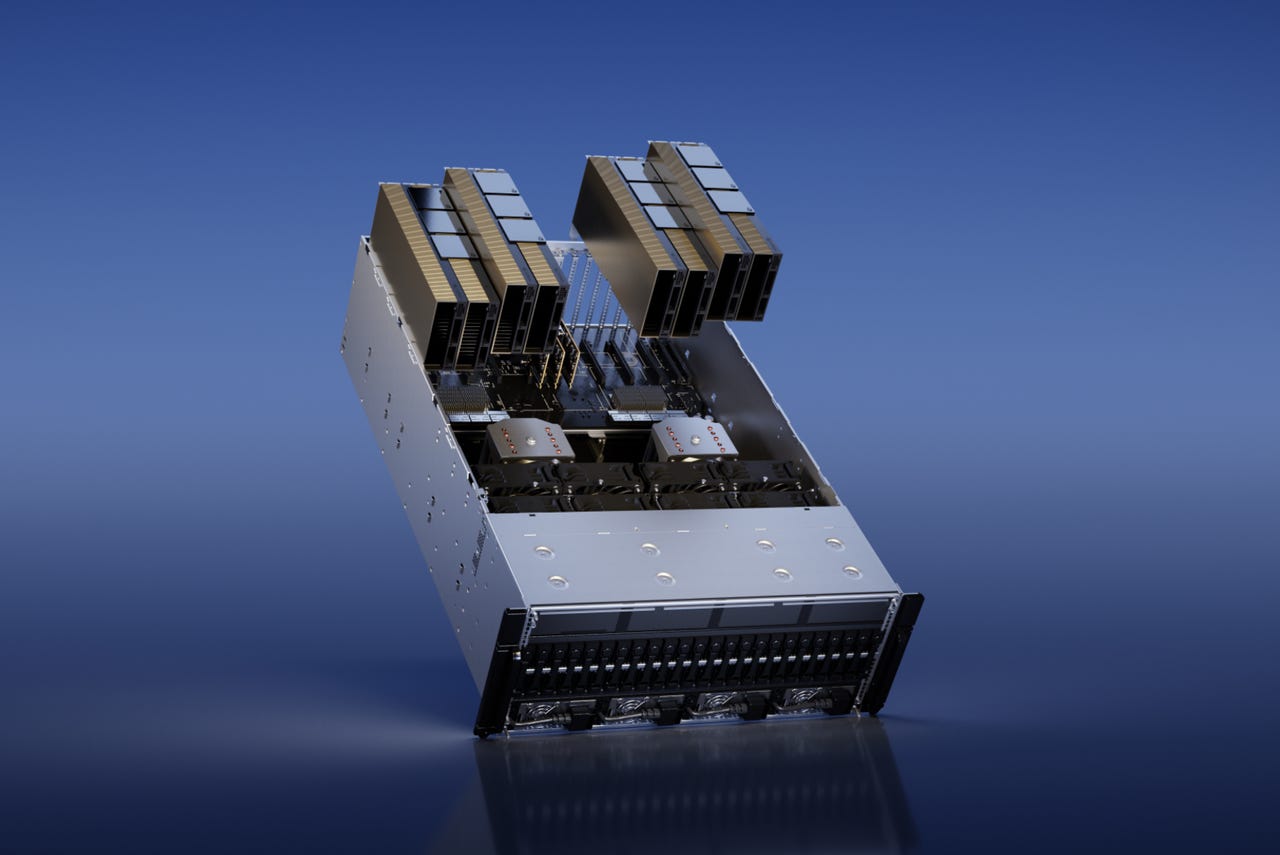

The H100 NVL, unveiled Tuesday by CEO Jensen Huang in his keynote to Nvidia's GTC conference, is specialized to handle prediction-making for large language models such as ChatGPT.

Referring to the buzz about OpenAI's ChatGPT as an "iPhone moment" for the artificial intelligence world, Nvidia co-founder and CEO Jensen Huang on Tuesday opened the company's spring GTC conference with the introduction of two new chips to power such applications.

One, NVIDIA H100 NVL for Large Language Model Deployment, is tailored to handle "inference" tasks on so-called large language models, the natural language programs of which ChatGPT is an example.

Inference is the second stage of a machine learning program's deployment, when a trained program is run to answer questions by making predictions.
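To make the two stages concrete, here is a minimal sketch in Python. It is a toy illustration only: the model is a simple straight-line fit, not a language model, and the function names `train` and `infer` are hypothetical labels chosen for this example, not anything from Nvidia's software.

```python
# Toy illustration of training vs. inference (not a language model).

def train(xs, ys):
    """Training stage: fit slope and intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


def infer(model, x):
    """Inference stage: apply the already-trained parameters to a new input."""
    slope, intercept = model
    return slope * x + intercept


# Training happens once, on known examples.
model = train([1.0, 2.0, 3.0, 4.0], [3.0, 5.0, 7.0, 9.0])

# Inference is repeated for every new query, with the parameters held fixed.
prediction = infer(model, 10.0)
print(prediction)
```

The point of the sketch is the split in workload: `train` runs once and is expensive relative to `infer`, which is cheap per call but runs every time a user asks a question.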

The H100 NVL has 94 gigabytes of accompanying memory, said Nvidia. Its Transformer Engine acceleration "delivers up to 12x faster inference performance at GPT-3 compared to the prior generation A100 at data center scale," the company said.

Nvidia's offering can in part be seen as a play to unseat Intel, whose server CPUs, the Xeon family, have long dominated inference tasks, while Nvidia commands the realm of "training," the phase where a machine learning program such as ChatGPT is first developed.

The H100 NVL is expected to be commercially available "in the second half" of this year, said Nvidia.

The second chip unveiled Tuesday is the L4 for AI Video, which the company says "can deliver 120x more AI-powered video performance than CPUs, combined with 99% better energy efficiency."

Nvidia said Google is the first cloud provider to offer the L4 video chip, using G2 virtual machines, which are currently in private preview release. The L4 will be integrated into Google's Vertex AI model store, Nvidia said.

New inference chips unveiled by Nvidia on Tuesday include the L4 for AI video, left, and H100 NVL for LLMs, second from right. Along with them are existing chips, the L40 for image generation, second from left, and Grace-Hopper, right, Nvidia's combined CPU and GPU chip.

In addition to Google's offering, L4 is being made available in systems from more than 30 computer makers, said Nvidia, including Advantech, ASUS, Atos, Cisco, Dell Technologies, Fujitsu, GIGABYTE, Hewlett Packard Enterprise, Lenovo, QCT and Supermicro.

There were numerous references to ChatGPT's creator, the startup OpenAI, in Huang's keynote. Huang hand-delivered the first Nvidia DGX system to the company in 2016. And OpenAI is going to be using the Hopper GPUs in Azure computers running its programs, Nvidia said.

This week, as part of the conference proceedings, Huang is having a "fireside chat" with OpenAI co-founder Ilya Sutskever, one of the lead authors of ChatGPT.

In addition to the new chips, Huang talked about a new software library that will be deployed in chip making called cuLitho.

The program is designed to speed up the task of making photomasks, the screens that shape how light is projected onto a silicon wafer to make circuits.

Nvidia co-founder and CEO Jensen Huang gives his keynote at the opening of the four-day GTC conference.

Nvidia claims the software will "enable chips with tinier transistors and wires than is now achievable." The program will also speed up the total design time, and "boost energy efficiency" of the design process.

Said Nvidia, 500 of its DGX H100 systems can do the work of 40,000 CPUs, "running all parts of the computational lithography process in parallel, helping reduce power needs and potential environmental impact."

The software is being integrated into the design systems of the world's largest contract chip maker, Taiwan Semiconductor Manufacturing, said Huang. It is also going to be integrated into design software from Synopsys, one of a handful of companies whose software tools are used to make the floorplans for new chips.

Synopsys CEO Aart de Geus has previously said that AI will be used to explore design trade-offs in chip making that humans would refuse to even consider.

A summary of Tuesday's announcements is available in an official Nvidia blog post.
