A schematic of Meta's approach to what's called multi-token prediction. During training of the AI model, the inputs are fed in as usual, but instead of the AI model being trained to produce a single token as a response -- the next most likely word, say -- the model is trained to simultaneously generate four or more likely tokens.

Generative AI models such as GPT-4 have astounded us all with their ability to produce textual output that resembles thought, such as answers to multiple-choice questions. Reaching the "right" thought, however, such as answering the question correctly, remains a deeper problem, as evidenced by the phenomenon of "hallucinations," where AI models assert false statements with apparent confidence.

In a new work, scientists at Meta have tweaked large language models (LLMs) to produce output that could be more correct in a given situation, by introducing the notion of penalties for wrong answers.

The approach, known as "multi-token prediction," seeks to instill in an AI model a cost for less desirable answers. In that sense, it is analogous to popular approaches for establishing guardrails in AI such as "reinforcement learning from human feedback," or RLHF, a method OpenAI popularized to curb ChatGPT's most outrageous outputs.

(An "AI model" is the part of an AI program that contains the numerous neural net parameters and activation functions that determine how the program functions.)

"Gains are especially pronounced on generative benchmarks like coding, where our models consistently outperform strong baselines by several percentage points," write the authors of "Better & Faster Large Language Models via Multi-token Prediction." Lead author Fabian Gloeckle, joined by colleagues at Facebook AI Research and collaborating institutions CERMICS École des Ponts ParisTech and LISN Université Paris-Saclay, posted the paper last month on the arXiv pre-print server.

The authors' principal concern is that LLMs -- despite their impressive accomplishments -- don't achieve things such as reasoning or planning. The conventional approach of ChatGPT and the rest, called "next-token prediction," they write, "remains an inefficient way of acquiring language, world knowledge, and reasoning capabilities."

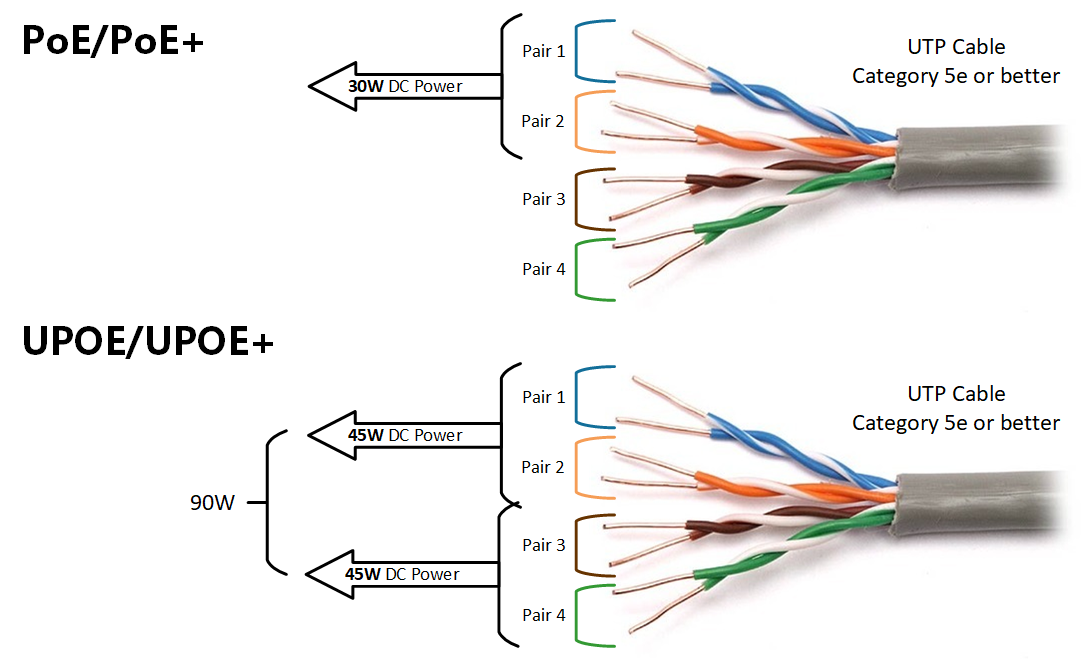

Instead of simple next-token prediction, where the AI model is trained to predict a single "token," such as a word or character in a string of tokens -- say, the next word in a sentence -- the Meta team's multi-token version is trained to predict multiple tokens of text simultaneously, each of which could be the correct completion of the sequence.

Technically, Gloeckle's team alters the basic structure of the LLM, known as a Transformer, so that it has four output "heads" that each produce a word or character or other symbol, rather than the standard single head.
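
To make the idea of several output heads concrete, here is a minimal Python sketch of how training targets might be laid out when a model predicts four tokens at once. It is an illustration, not the authors' code: the function names (`multi_token_targets`, `multi_token_loss`), the toy sentence, and the choice to simply add up one cross-entropy term per head are all assumptions made for this example.

```python
import math

def multi_token_targets(tokens, n_heads=4):
    """Pair each context with the next n_heads tokens, one target per head.

    Hypothetical helper: head 0 is asked for the token 1 step ahead,
    head 1 for the token 2 steps ahead, and so on.
    """
    last = len(tokens) - n_heads  # positions that still have n_heads tokens after them
    return [(tokens[: t + 1], tokens[t + 1 : t + 1 + n_heads]) for t in range(last)]

def multi_token_loss(head_probs, targets):
    """Sum of per-head cross-entropies (an assumed, simplified training loss).

    head_probs: one dict per head, mapping candidate token -> predicted probability.
    targets:    the tokens each head should have predicted.
    """
    return sum(-math.log(probs[target]) for probs, target in zip(head_probs, targets))

sentence = ["the", "cat", "sat", "on", "the", "mat"]
pairs = multi_token_targets(sentence, n_heads=4)
for context, future in pairs:
    print(context, "->", future)

# Toy predictions: every head puts probability 0.5 on its correct token.
context, future = pairs[0]
toy_probs = [{tok: 0.5} for tok in future]
loss = multi_token_loss(toy_probs, future)
```

With a standard single head, only the first entry of each `future` list would be used as a target. In this sketch, the extra heads mean each training position also contributes terms for tokens further ahead.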

The approach's immediate benefit is that it can be more memory-efficient when the AI model is live, making predictions for users, known as the inference stage of AI. Because multiple output heads can be working behind the scenes to try possibilities, a high degree of parallelism can happen. This form of "speculative decoding" means the multi-token approach "can speed up inference by a factor of 3
