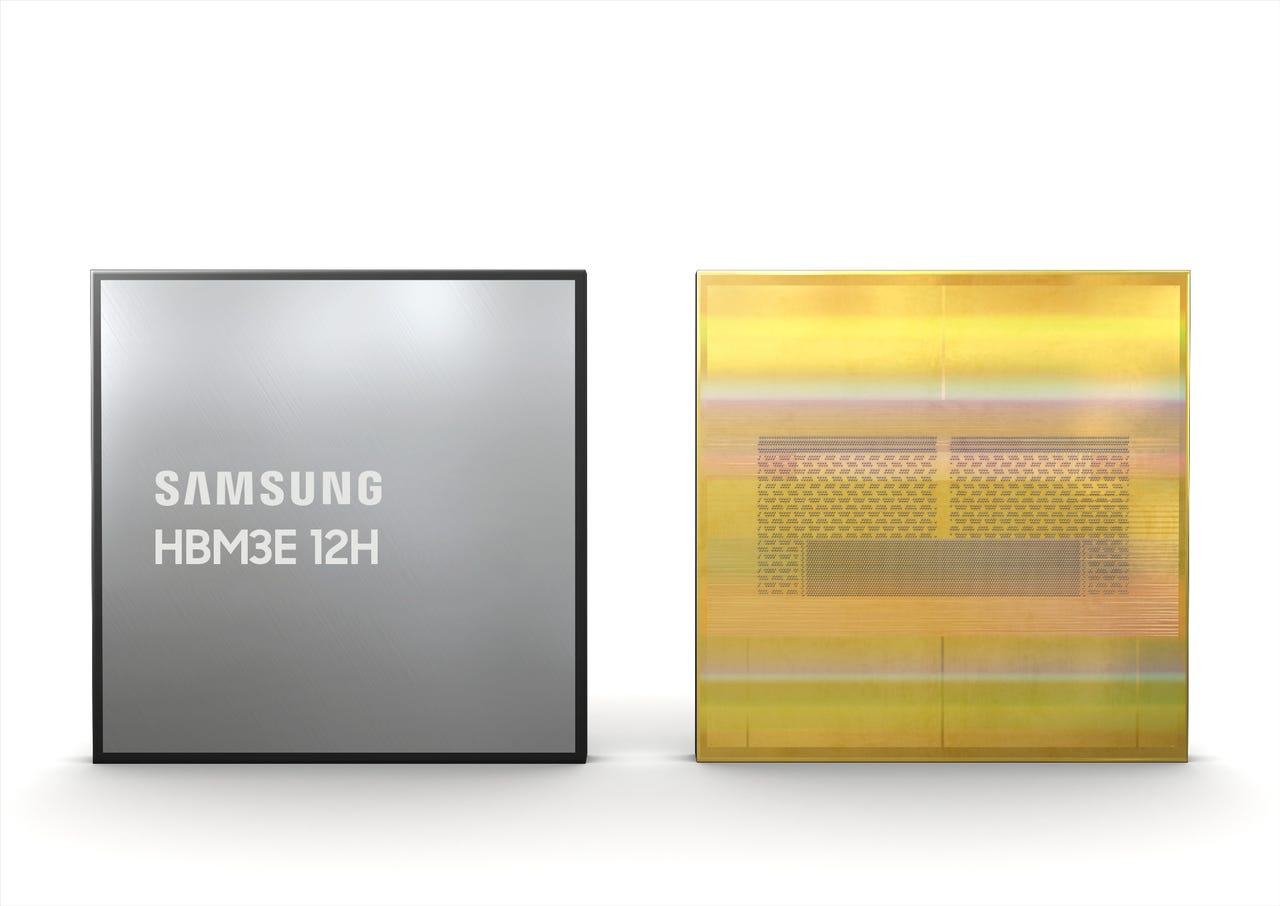

Image: Samsung

Samsung said on Tuesday that it has developed the industry's first 12-stack HBM3E DRAM, making it the highest-capacity high bandwidth memory (HBM) to date.

The South Korean tech giant said the HBM3E 12H DRAM provides a maximum bandwidth of 1,280GB/s and a capacity of 36GB. Both bandwidth and capacity are up by 50% compared to 8-stack HBM3, Samsung said.


HBMs are composed of multiple DRAM modules stacked vertically, each called a stack or a layer. In the case of Samsung's latest, each DRAM module has a capacity of 24 gigabits (Gb), equivalent to 3 gigabytes (GB), and there are twelve of them, for a total of 36GB.
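The capacity arithmetic above can be checked with a short sketch. The function name `stack_capacity_gb` is hypothetical, and the 8-stack figure assumes the same 24Gb module per layer, which Samsung's announcement as reported here does not state explicitly.

```python
# Sketch of the stack-capacity arithmetic; names are illustrative only.
GBIT_PER_GBYTE = 8  # 8 gigabits per gigabyte


def stack_capacity_gb(layers: int, gbit_per_layer: int) -> float:
    """Total capacity in gigabytes of a stack of identical DRAM layers."""
    return layers * gbit_per_layer / GBIT_PER_GBYTE


# 12 layers of 24Gb each, as described for HBM3E 12H.
capacity_12h = stack_capacity_gb(12, 24)

# Assumption: an 8-layer stack built from the same 24Gb modules.
capacity_8h = stack_capacity_gb(8, 24)

# Relative capacity increase of 12 layers over 8 layers, in percent.
increase_pct = (capacity_12h / capacity_8h - 1) * 100

print(f"12-stack: {capacity_12h:.0f}GB, 8-stack: {capacity_8h:.0f}GB, "
      f"increase: {increase_pct:.0f}%")
```

Under that assumption, going from eight layers to twelve accounts for the 50% capacity increase Samsung cites.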

Memory makers Samsung, SK Hynix, and Micron are competing to stack more layers while limiting the height of the stacks, so that the chip gains capacity while staying as thin as possible.

All three are planning to increase their HBM production output this year, with the downcycle of the memory chip market seemingly over. They also aim to meet high demand driven by the popularity of AI, which has boosted sales of the GPUs, especially those made by Nvidia, that are paired with these HBMs.

According to Samsung, it applied advanced thermal compression non-conductive film (TC NCF) to give the 12-stack HBM3E the same height as 8-stack ones and so meet package requirements. The film is thinner than those previously used and eliminates voids between the stacks, the company said. The gap between the stacks was reduced to seven micrometers, increasing the vertical density of the 12-stack HBM3E by over 20% compared to 8-stack HBM3.


Samsung said TC NCF also allowed the use of bumps of different sizes during chip bonding: small bumps are used in areas for signaling and large ones in places that need heat dissipation.

The tech giant said the higher performance and capacity of HBM3E 12H will allow customers to reduce the total cost of ownership for data centers. In AI applications, the average speed of AI training can be increased by 34% and the number of simultaneous users of inference services can be expanded by 11.5 times compared to HBM3 8H, Samsung claimed.

The company has already provided samples of HBM3E 12H to customers and is planning to start mass production within the first half of this year.
